Automation is one of the key elements of a modern Security Operations Center. In a traditional SOC without any automation, analysts have to spend a lot of time on tedious and repetitive tasks. This is inefficient in multiple ways. The analysts can’t use their skills because they are busy doing something a simple program could do just as well. Doing things manually can also significantly increase the time between starting an investigation and successfully resolving an incident. The more time malware or an attacker can spend in a network, the more damage it can do. So decreasing the response time is essential if you want to keep your network secure.

In some cases, even a centralized Incident Response Automation solution can’t provide the necessary speed and performance. In this post, I’m going to show a problem that can be hard to address properly with a slower solution. The idea and the code I describe are not a full-fledged product; it is just an interesting concept based on free tools, like PowerShell and Sysmon, which could be implemented in a home network or at smaller companies.

Latency in Incident Response Automation

We can differentiate between two types of SIEMs based on their latency:

- Event-driven SIEM: When the tool gets a log, it pushes it through a rule pipeline. In this pipeline, the single event is tested against every rule. If it matches, the SIEM creates an alert or marks the event for further processing. The benefit of this type of SIEM is its low latency: when the event arrives, it is immediately tested against the rules and doesn’t have to wait for anything. QRadar is an event-driven SIEM.

- Schedule / Time / Query-driven SIEM: The searches in a SIEM like this are stored as queries and are executed on a defined schedule. An incoming log is stored by the SIEM, but nothing else happens with it initially. When the scheduled time comes, the search is executed and looks for matches. No matter how you schedule your query, the logs are going to wait for some time before they are looked at. You can decrease the time between runs, but this is not always a good solution. Some queries must check the logs from the last hours or even days. Executing a search over the last day every minute just to lower the latency is going to overload your SIEM. One well-known schedule-based SIEM is Splunk.

(Both types have their benefits and drawbacks, but I’m only focusing on the latency attributes now.)

In my job, I frequently do Detection/Automation engineering tasks, so I see how queries are scheduled in these systems. I have encountered some rules that were timed to be executed every 10-15 minutes, but these are rare. Most of the rules are scheduled to be executed once every 1-3 hours. The ones that run even less often are not worth mentioning here; their latency implications are obvious.

One also has to consider that logs do not arrive in the SIEM at the time of their creation. Sometimes they are sent to the SIEM from the end system in scheduled batches. Sometimes the first stop is not even a SIEM but an intermediary system that is used to collect, store, distribute, or do some calculation on the data. This introduces some additional minutes between the log creation and its processing by a rule.

Let’s imagine a scenario in which we detect malware and then try to collect additional information from the system (for example, the binary of the detected malware). First, the logs are generated on the system. Then we forward the logs to a SIEM or to an intermediary system (a few minutes of latency). Then we wait for the rules to be executed (at least an additional 10-15 minutes). At this point, our rules can detect the infection, so we can start the information collection process. Our automation service connects to the machine, or to an agent on the machine, and asks for the information (again some minutes). During this time the malware on the system can do anything it wants.

If there is no automation, most of the steps must be done manually by an analyst. This can stretch those few minutes into hours. Helping the analyst by enriching the incident ticket with information and doing some preliminary steps can decrease the IR time. However, sometimes even with automation, this period is way too long.

Ransomware example

In my previous blog post I explained how fast ransomware can act on a machine. In only 10-15 minutes it can encrypt the whole machine. Even when it takes longer to finish, it still does a lot of harm in the first few minutes. When an infection can do that much harm in 15 minutes, a 30+ minute gap between the action and automated remediation is not acceptable.
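To make the arithmetic concrete, here is a rough sketch that adds up the stages of the scenario described earlier and compares the total with the encryption time. The minute values are my own illustrative assumptions picked from the ranges mentioned in the text, not measurements.

```powershell
# Rough worst-case latency budget for a schedule-driven pipeline.
# All minute values are illustrative assumptions, not measurements.
$stages = [ordered]@{
    LogForwarding  = 3    # "a few minutes" to reach the SIEM or an intermediary
    RuleSchedule   = 15   # worst-case wait for a rule scheduled every 15 minutes
    DataCollection = 3    # automation connecting to the host and pulling data
}

$totalMinutes = ($stages.Values | Measure-Object -Sum).Sum
$encryptionMinutes = 15   # upper end of the 10-15 minute encryption time

"Worst-case response latency: $totalMinutes minutes"
"Ransomware finishes first: $($totalMinutes -gt $encryptionMinutes)"
```

Even with the fastest rule schedule I have seen, the response arrives after the encryption could have finished. With the more common 1-3 hour schedules, the gap is far larger.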

Recently (2 months ago) I found out that Sysmon can detect file deletions as well, and that it can automatically archive the deleted files. This gave me the idea to create a quick detection solution that runs on the machine itself and executes some IR steps to decrease the harm done to the system.

The idea can be further fine-tuned, additional detection options or IR actions can be defined. This is just a skeleton and some scripts one can use to implement their own solution. (I haven’t used any GUI in my test, but it can be easier for somebody else to do it that way. But I’m going to provide code and explanation for each step I made.)

Detection and Response

Below, I’m listing the high-level steps I made and the logic behind the detection and response. Later I’m going to go into details for every step and explain why I used the methods the way I did.

- Configure and install Sysmon. For this Proof of Concept, you only have to configure the delete event logging and the file archive function (or additionally the file create one as well).

- Configure the permission of the archive folder

- Configure key-based authentication for scp.

- Download procdump. I’m going to use this tool to gather the memory dump of the suspicious processes.

- Create a honeytoken (honeyfile). We are going to detect the malicious activity based on honeyfile access.

- Create a cleaner scheduled task that removes the files from the archive folder from time to time.

- Create a detection scheduled task that triggers on honeytoken access and starts the response action.

- Create a response scheduled task that dumps the memory of the suspicious process and then kills the process.

- Create a backup scheduled task that backs up the files from the archive folder to the remote server.

1. Install and configure Sysmon

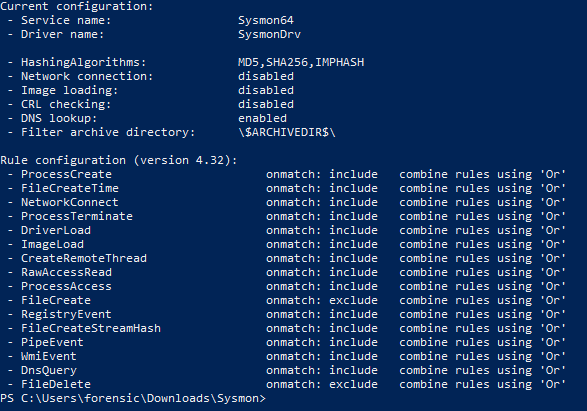

You can download and install Sysmon from its official site. The installation process is pretty straightforward:

.\Sysmon64.exe -i config_file.txt -accepteula

You can configure your config file however you want (I will show the relevant part of my config later on). To be able to detect honeyfile usage by ransomware, it is worth turning on EventID 11 (File Creation) and EventID 23 (File Deletion). It is important to point out that Sysmon is not the best way to monitor a honeyfile: creating and deleting a file is logged by Sysmon, but modifying a file or opening it for reading is not. However, most ransomware will generate some File Creation or File Deletion events (not all of them, though), so Sysmon will work for us.

In a later step, I’m going to configure a scheduled task to trigger when this honeytoken is touched. The filtering solution in the scheduled task (and in event viewer) has a big flaw, unfortunately.

Microsoft uses a limited version of XPath for filtering, which does not support wildcards. My original idea was to trigger the scheduled task if any file is created/touched in the “honeyfolder”. The log that is created contains the full path, including the filename. The “honeyfolder” is only a part of the full path, so I would have needed a “contains” operator or a wildcard such as C:\Folder\honeyfolder*. That way a newly created file with a full path of C:\Folder\honeyfolder\testfile would match the filter. Unfortunately, only a complete match is supported.

Because of this, I decided to create this PoC only based on 1 file in the “honeyfolder”, so it will trigger on exact match. If you want to trigger on any file in a specific folder, there are two methods you can use in some other scenarios. They weren’t a good fit for me though:

- Configure Sysmon to only trigger on changes in the honeyfolder. Sysmon itself has more configuration options than the XPath filtering, so you can do this easily. On the other hand, in this scenario, I want to save every deleted file (to save it from the ransomware encryption) so I couldn’t configure my Sysmon to only watch 1 folder. In this project, I had to log every file deletion action.

- You can trigger on everything and filter the relevant events later in PowerShell. You can configure your scheduled task to trigger on every File Creation/File Deletion event, not just the ones in the “honeyfolder”. When it triggers, you can execute a PowerShell script that checks whether any of the actions happened in the “honeyfolder”. You have more filtering capabilities in PowerShell, but this also means you must push a ton of events through your PS script, which can be slow.
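For the second method, the folder check itself is simple in PowerShell. The following is only a minimal sketch of what such a filter could look like; the function name Test-HoneyFolderPath and its parameters are made up for this illustration and are not part of my scripts.

```powershell
# Hypothetical helper: returns $true when a TargetFilename taken from a Sysmon
# event lies inside the honeyfolder (the folder path itself does not count).
function Test-HoneyFolderPath {
    param(
        [Parameter(Mandatory)] [string] $TargetFilename,
        [Parameter(Mandatory)] [string] $HoneyFolder
    )
    # Normalize to exactly one trailing backslash so that
    # C:\Folder\honeyfolder2\x does not match C:\Folder\honeyfolder.
    $prefix = $HoneyFolder.TrimEnd('\') + '\'
    return $TargetFilename.StartsWith($prefix, [System.StringComparison]::OrdinalIgnoreCase)
}

Test-HoneyFolderPath -TargetFilename 'C:\Folder\honeyfolder\testfile' -HoneyFolder 'C:\Folder\honeyfolder'
Test-HoneyFolderPath -TargetFilename 'C:\Folder\honeyfolder2\testfile' -HoneyFolder 'C:\Folder\honeyfolder'
```

The first call returns True and the second returns False. The comparison ignores case because Windows paths are normally case-insensitive.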

Besides the File Creation/Deletion logging, you also have to turn on the archiving functionality of Sysmon. This means every file is saved into a backup folder when it is deleted.

Sysmon config file (be aware that you also have to exclude any other logging, see on github):

<Sysmon schemaversion="4.32">

<HashAlgorithms>md5,sha256,IMPHASH</HashAlgorithms>

<ArchiveDirectory>SafeHaven</ArchiveDirectory>

<EventFiltering>

<RuleGroup name="EveryFileCreation" groupRelation="or">

<FileCreate onmatch="exclude">

</FileCreate>

</RuleGroup>

<RuleGroup name="EveryFileDeletion" groupRelation="or">

<FileDelete onmatch="exclude">

</FileDelete>

</RuleGroup>

</EventFiltering>

</Sysmon>

The HashAlgorithms tag defines the types of hashes that should be calculated for the files. It also defines how the archived files are named, as their name is the calculated hashes concatenated together.

The ArchiveDirectory tag contains the name of the archive directory we want to use. The folder is created in the root of the drive, so in my case, it is C:\SafeHaven. (To be precise, it is created on every partition, but I only wrote my scripts for the C: drive.)

An ACL is also configured on it. Its owner is SYSTEM and every other user is prevented from accessing it. So a normal user (or even an admin) won’t be able to access it or list its entries. But the files copied here keep their original ACL. For an attacker to remove or alter these files, he has to modify the ACL on the folder or execute his code as NT AUTHORITY\SYSTEM (which is not a trivial thing to do, at least not silently).

In my test, I’m going to modify this ACL, so my user will have full access to the folder. This is NOT a good approach in a production environment, because a ransomware too will be able to delete the files from the archive folder this way. I configured it this way to make my test easier, but on a production system you should have a service user for backup purposes only and you should configure this folder to be accessible by that user.

The last and biggest part of the code is for logging. In this case, I configured the File Creation and File Deletion events to be logged every time. I used the exclude filter and then I defined nothing. By excluding nothing I practically included everything.

One thing to know is that file creation and deletion in the ArchiveDirectory won’t be logged.

Download the config file from github

2. SafeHaven ACL settings

The following script configures the folder to be accessible for my user. You should configure your backup user here if you have one.

$path = "C:\SafeHaven"

$Acl = Get-Acl $path

$Ar = New-Object System.Security.AccessControl.FileSystemAccessRule("WinForTest\WinForTest", "FullControl", "ContainerInherit,ObjectInherit", "None", "Allow")

$Acl.SetAccessRule($Ar)

$Acl.SetAccessRuleProtection($False,$True)

Set-Acl "C:\SafeHaven" $Acl

Unfortunately, I still encountered some files that weren’t accessible by my user. However, most of the time I want to back up the files of my own user, not system files and other objects, and the files of my user are always accessible.

3. SCP

I decided to use SCP to securely transfer the archived files to a backup server. I chose this tool because it is built into Windows. This way no external tools have to be installed for secure file copy, and all of the binaries I use are signed by MS.

You need to configure key-based authentication for SCP. There is nothing tricky in this, so I’m not going to explain it in detail. In a later step, we are going to use the generated keys along with the user, IP, and path on the remote server.

4. Download procdump

This is still part of the prerequisites. You only need it if you want to collect the memory dump of the identified suspicious process. As before, you can use any other tool you want, but my scripts are based on procdump. Also, procdump is signed by MS, which makes it a good choice in case you want to block the execution of some files based on the certificate signer.

The only thing you have to do is to download procdump (https://docs.microsoft.com/en-us/sysinternals/downloads/procdump) and put it into a folder you want to use later on. Remember the path to the file, because you have to put it into the scripts later. Potentially you can store it in a folder that is only accessible by the backup user. This way a normal attacker won’t have access to it.

5. Create the honeyfile

As I stated before in this PoC I’m going to create only one file which is going to be used as a honeyfile. If anything deletes this file, we are going to trigger an action. I’m also going to trigger if somebody creates this file. This latter one is mostly for showcase purposes, but it is also important to know that ransomwares can do multiple actions on a file or in a folder and some of these actions will result in a file creation event. (See some details in my previous post).

File creation and some types of file overwrite create an event with ID 11 (File Creation). Other types of file overwrite and file deletion create an event with ID 23 (File Delete). So, both logs are useful for us. Additionally, ransomware can create ransom notes in the “honeyfolder”, and this action can potentially be monitored too. (But in my test I do not use a “honeyfolder”, only a file.)

Again, you can create this file anywhere you want. If you want to hide it from the user, you can put it into a directory the user rarely visits. Additionally, you can set the hidden and system attributes on the folder (or on the file). However, some ransomware does not visit folders or encrypt files that have the system attribute, so this can be risky.

Also, be aware that ransomware won’t encrypt every file type. Executables and other binaries are often left intact, so it is worth choosing a different extension. I chose to use a txt file as my honeyfile.
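A minimal sketch of how the honeyfile could be created from PowerShell follows. The function name New-HoneyFile, its parameters, and the placeholder content are assumptions made up for this example. Hiding is opt-in because of the risk mentioned above.

```powershell
# Sketch: create a honeyfile and optionally mark it Hidden + System.
# New-HoneyFile and its parameters are hypothetical names for this example.
function New-HoneyFile {
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $Content = 'placeholder content',
        [switch] $Hide
    )
    # Create the parent folder if it does not exist yet.
    $folder = Split-Path -Parent $Path
    if (-not (Test-Path -LiteralPath $folder)) {
        New-Item -ItemType Directory -Path $folder -Force | Out-Null
    }
    Set-Content -LiteralPath $Path -Value $Content
    if ($Hide) {
        # Add the attributes to the existing ones instead of overwriting them.
        $file = Get-Item -LiteralPath $Path -Force
        $file.Attributes = $file.Attributes -bor [System.IO.FileAttributes]::Hidden -bor [System.IO.FileAttributes]::System
    }
    # Return the file object so the caller can inspect it.
    Get-Item -LiteralPath $Path -Force
}
```

For example, `New-HoneyFile -Path 'C:\Users\WinForTest\Ransomware\honeytokenfile.txt'` creates a visible txt honeyfile; add `-Hide` only if you accept that some ransomware may then skip it.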

I created mine in the following path:

C:\Users\WinForTest\Ransomware\honeytokenfile.txt

6. SafeHaven_cleaner scheduled task

Sysmon saves every file on delete and stores them in the defined Archive Folder. Windows creates a lot of temp files which are then removed, and many files can also be deleted by the user. So, this folder can quickly fill up with “backup” files.

However, this is not a full-fledged backup solution and it shouldn’t be used as one. This means we don’t need these files normally. We only want to save them when we detect a malicious entity on the machine. So, to prevent this folder from occupying the whole disk, a scheduled task removes every old file from time to time. I created a script that registers a new scheduled task. The scheduled task is executed every 15 minutes and removes the files older than 15 minutes.

This way, if malware is detected, we can save the files deleted in the last 15 minutes and every file removed after the detection. When ransomware runs on a machine, the honeytoken file or folder is not going to be the first one it encrypts. With this 15-minute setting, I expect the ransomware to hit the honeytoken within 15 minutes. If this happens, the encrypted files can be saved; otherwise, some of them are going to be lost.

But here is the first issue I encountered during this test. When files are deleted and then copied by Sysmon into the archive folder, the creation date is going to be inherited from the original file. This way it is not possible to remove the files older than 15 minutes. Instead of this, I relied on the last access time timestamp, which was updated during this copy on delete operation. This is not an optimal solution but works for this scenario.

$jobname = "SafeHaven_cleaning"

$taskpath = "\Automation"

$action = New-ScheduledTaskAction -Execute 'Powershell.exe' -Argument 'cd C:\SafeHaven; Get-ChildItem -Force -File | Where-Object { $_.LastAccessTime -lt (Get-Date).AddMinutes(-15) } | ForEach-Object { Remove-Item $_ -Force -ErrorAction SilentlyContinue; Remove-Item $_ -Confirm:$false -ErrorAction SilentlyContinue }'

$trigger = New-ScheduledTaskTrigger -Once -At (Get-Date).Date -RepetitionInterval (New-TimeSpan -Minutes 15)

$settings = New-ScheduledTaskSettingsSet -AllowStartIfOnBatteries -DontStopIfGoingOnBatteries -StartWhenAvailable -DontStopOnIdleEnd

Register-ScheduledTask -TaskPath $taskpath -TaskName $jobname -Action $action -Trigger $trigger -RunLevel Highest -User "WinForTest\WinForTest" -Settings $settings

In this code, I created a scheduled task called SafeHaven_cleaning. The task is stored in the \Automation scheduled task directory. It executes the defined PowerShell command every 15 minutes. The command goes through the files in the archive folder (C:\SafeHaven) and removes every file that was last accessed more than 15 minutes ago.

In my experience, the Remove-Item command sometimes doesn’t work with the -Force argument and sometimes doesn’t work without it, so to make it easier I execute both variants one after the other. I can’t explain the issue, though.
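If you want to try the age filter against a scratch folder before scheduling it, the same logic can be wrapped in a function. This is only a sketch; the name Remove-StaleArchiveFile and its parameters are hypothetical and do not exist in my repository.

```powershell
# Sketch of the cleaning step as a reusable function (hypothetical name).
# It removes files whose LastAccessTime is older than the given age and
# returns the full paths of the files that were actually removed.
function Remove-StaleArchiveFile {
    param(
        [Parameter(Mandatory)] [string] $ArchivePath,
        [int] $MaxAgeMinutes = 15
    )
    $cutoff = (Get-Date).AddMinutes(-$MaxAgeMinutes)
    Get-ChildItem -LiteralPath $ArchivePath -Force -File |
        Where-Object { $_.LastAccessTime -lt $cutoff } |
        ForEach-Object {
            Remove-Item -LiteralPath $_.FullName -Force -ErrorAction SilentlyContinue
            # Report what was removed so a scheduled run can be logged.
            if (-not (Test-Path -LiteralPath $_.FullName)) { $_.FullName }
        }
}
```

Calling `Remove-StaleArchiveFile -ArchivePath 'C:\SafeHaven'` would mirror the scheduled command, while pointing it at a test folder lets you check which files the filter selects.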

7. Honeytoken removal/encryption detection

The next step is to somehow detect if the honeytoken is accessed. For this, I’m also going to create a scheduled task, but in this case, it is going to trigger if Sysmon creates an Event with ID 11 (File Creation) or ID 23 (File Deletion). When a ransomware acts on a file, it can do multiple actions. Some of them are not logged by Sysmon while some of the other actions can be logged as Event ID 11 or Event ID 23.

I’m using a different way to create a scheduled task here. Creating one that triggers on events can’t be done the same way we did with the time-scheduled one. (Download the code from github) The first part of the code defines the event I want to trigger. The query language we have to use is not too straightforward, but you can use my script and then just replace the path to the honeytoken file.

$class = Get-CimClass -ClassName MSFT_TaskEventTrigger -Namespace root/Microsoft/Windows/TaskScheduler

$trigger = $class | New-CimInstance -ClientOnly

$trigger.Enabled = $true

$trigger.Subscription = '

<QueryList>

<Query Id="0" Path="Microsoft-Windows-Sysmon/Operational">

<Select Path="Microsoft-Windows-Sysmon/Operational">*[System[(EventID=11 or EventID=23) and TimeCreated[timediff(@SystemTime) &lt;= 300000]]] and *[EventData[Data[@Name="TargetFilename"] and Data="C:\Users\WinForTest\Ransomware\honeytokenfile.txt"]]</Select>

</Query>

</QueryList> '

The second part of the code is the PowerShell command we want to execute when the task triggers. First, the code disables the cleaning task, so the archived files are not removed automatically anymore. Then it starts a PowerShell script that creates a process dump of the suspicious process and kills that process.

Then it enables and starts the backup task. This task uploads the archived files to a remote server to keep them safe and accessible for investigation (for example, the malware can delete its own binary, but this way the binary is saved and can be investigated).

$ActionParameters = @{

Execute = 'powershell.exe'

Argument = 'Disable-ScheduledTask -TaskPath "Automation" -TaskName "SafeHaven_cleaning";Stop-ScheduledTask -TaskPath "Automation" -TaskName "SafeHaven_cleaning";powershell -ExecutionPolicy Bypass C:\Users\WinForTest\Documents\scripts\5_1_procdumpandkill_script.ps1; Enable-ScheduledTask -TaskPath "Automation" -TaskName "DeletedFileArchive"; Start-ScheduledTask -TaskPath "Automation" -TaskName "DeletedFileArchive";'

}

And the last part of this code is to setup the script and register the scheduled task.

$Action = New-ScheduledTaskAction @ActionParameters

$Principal = New-ScheduledTaskPrincipal -UserId 'WinForTest\WinForTest' -LogonType ServiceAccount

$Settings = New-ScheduledTaskSettingsSet

$RegSchTaskParameters = @{

TaskName = 'Honeyfile access'

Description = 'Triggers when the honeyfile is removed or created'

TaskPath = '\Automation\'

Action = $Action

Principal = $Principal

Settings = $Settings

Trigger = $Trigger

}

Register-ScheduledTask @RegSchTaskParameters

8. ProcDump and Kill

This powershell script is executed after somebody touched the honeyfile we created. The script first queries the Windows events to find the events which triggered the other scheduled task. Then it extracts the process ID from the logs. By using the PID it can execute procdump to save the memory of the process (or processes) into the Archive Folder (SafeHaven).

Finally, it kills the suspicious processes.

$query = '<QueryList><Query Id="0" Path="Microsoft-Windows-Sysmon/Operational"><Select Path="Microsoft-Windows-Sysmon/Operational">*[System[(EventID=11 or EventID=23) and TimeCreated[timediff(@SystemTime) &lt;= 300000]]] and *[EventData[Data[@Name="TargetFilename"] and Data="C:\Users\WinForTest\Ransomware\honeytokenfile.txt"]]</Select></Query></QueryList>';

Get-WinEvent -FilterXML $($query)| ForEach {$eventXML = [xml]$_.toxml(); $info=$eventXML.Event.EventData.Data; $info| where-object Name -like "ProcessId"} | ForEach {$_."#text"} | Select -Unique | ForEach {C:\Users\WinForTest\Downloads\Procdump\procdump64.exe $_ -accepteula -mp "C:\SafeHaven\"; Stop-Process -Id $_ -Force;};
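The PID extraction in the middle of that pipeline can be tried on its own, without Sysmon, by feeding it event XML as text. The sketch below assumes a helper name (Get-SysmonProcessId) and uses a trimmed, made-up sample event that only mimics the shape of a real Sysmon event; the PID and image path in it are invented.

```powershell
# Hypothetical helper: pull the unique ProcessId values out of Sysmon event XML.
function Get-SysmonProcessId {
    param([Parameter(Mandatory)] [string[]] $EventXml)
    $EventXml |
        ForEach-Object { ([xml]$_).Event.EventData.Data } |
        Where-Object { $_.Name -eq 'ProcessId' } |
        ForEach-Object { [int]$_.'#text' } |
        Select-Object -Unique
}

# Trimmed, made-up sample that mimics the shape of a Sysmon event 23.
$sample = @'
<Event xmlns="http://schemas.microsoft.com/win/2004/08/events/event">
  <System><EventID>23</EventID></System>
  <EventData>
    <Data Name="ProcessId">4242</Data>
    <Data Name="Image">C:\Temp\sample.exe</Data>
    <Data Name="TargetFilename">C:\Users\WinForTest\Ransomware\honeytokenfile.txt</Data>
  </EventData>
</Event>
'@

# The same event passed twice still yields a single PID.
Get-SysmonProcessId -EventXml $sample, $sample
```

Deduplicating matters because one process usually produces several matching events, and procdump should only be started once per process.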

9. DeletedFileArchive scheduled task

This is the last scheduled task we need to finish our automation. This task is going to be enabled (by another scheduled task) when the honeytoken file is touched. It is going to be executed once when it is enabled and then every time a file is deleted. This way we can securely backup every file as soon as it is deleted.

So again, the first part of the scheduled task creation is to define its trigger condition, which is any file deletion Sysmon event:

$class = Get-CimClass -ClassName MSFT_TaskEventTrigger -Namespace root/Microsoft/Windows/TaskScheduler

$trigger = $class | New-CimInstance -ClientOnly

$trigger.Enabled = $true

$trigger.Subscription = '

<QueryList>

<Query Id="0" Path="Microsoft-Windows-Sysmon/Operational">

<Select Path="Microsoft-Windows-Sysmon/Operational">*[System[(EventID=23) and TimeCreated[timediff(@SystemTime) &lt;= 300000]]]</Select>

</Query>

</QueryList> '

The next step is to upload the files to a remote server. When the files are uploaded (or at least an attempt was made), they are removed from the archive directory on the machine.

One can see that I used the Remove-Item command both with the -Force argument and without it. The reason is that sometimes the files are not removed with -Force and sometimes they are not removed without it (I don’t know why), so I implemented both in my code.

$ActionParameters = @{

Execute = 'powershell.exe'

Argument = 'cd C:\SafeHaven;GCI -Force | ForEach-Object {scp -P 22 -i C:\Users\WinForTest\Documents\scripts\testlinux_key.pem -o StrictHostKeyChecking=no $_ winfortest@51.103.130.116:/home/winfortest/SafeHaven;remove-item $_ -Force -ErrorAction SilentlyContinue; remove-item $_ -Confirm:$false -ErrorAction SilentlyContinue;}'

}
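The action above removes each file whether or not the upload succeeded. A more careful variant could check the result of the copy first. The following is a sketch only: Send-ArchiveFile and the $Upload script block are hypothetical names, and the upload command is injected so the logic can be tried without a server.

```powershell
# Sketch: delete an archived file only after its upload reported success.
# $Upload is a hypothetical script block that receives the full file path
# and returns the exit code of the copy command (0 = success).
function Send-ArchiveFile {
    param(
        [Parameter(Mandatory)] [string] $ArchivePath,
        [Parameter(Mandatory)] [scriptblock] $Upload
    )
    foreach ($file in Get-ChildItem -LiteralPath $ArchivePath -Force -File) {
        $exitCode = & $Upload $file.FullName
        if ($exitCode -eq 0) {
            Remove-Item -LiteralPath $file.FullName -Force -ErrorAction SilentlyContinue
        } else {
            # Keep the local copy so a later run can retry the upload.
            Write-Warning "Upload failed for $($file.Name) (exit code $exitCode); keeping local copy."
        }
    }
}
```

With scp, the script block could look like `{ param($p) scp -i <key> $p <user>@<server>:<path> | Out-Null; $LASTEXITCODE }`, where the placeholders stand for your own key, user, server, and remote path.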

And finally, I register the task and disable it, so it won’t be executed before we detect any ransomware on the system.

$Action = New-ScheduledTaskAction @ActionParameters

$Principal = New-ScheduledTaskPrincipal -UserId 'WinForTest\WinForTest' -LogonType ServiceAccount

$Settings = New-ScheduledTaskSettingsSet

$RegSchTaskParameters = @{

TaskName = 'DeletedFileArchive'

Description = 'Uploads the files to a server after they are deleted and archived'

TaskPath = '\Automation\'

Action = $Action

Principal = $Principal

Settings = $Settings

Trigger = $Trigger

}

Register-ScheduledTask @RegSchTaskParameters

Disable-ScheduledTask -TaskPath "Automation" -TaskName "DeletedFileArchive"

DEMO

I wanted to record a demo, but in the end I felt it was too boring as the video only contained processes running in the background (invisible) and then some files appeared on my backup server which I monitored. Thus, I won’t provide any demo here, I’m only going to explain my test.

I modified my Sysmon config so that the task triggered on any file creation in the “honeyfolder”. I executed the ransomware on the system, which encrypted everything, including my honeyfile, in about 3-4 minutes. I specifically chose a ransomware that I had already tested and that I knew would generate file deletion events. (If there is no file deletion event, then no files are saved to the archive folder, so I would have missed a big chunk of my code.) A lot of ransomware won’t create a file deletion event, though, so the backup/save function won’t work every time, but you can still save the binary of the ransomware, which would be removed otherwise.

After the ransomware had deleted my file, my script was triggered. It created a memory dump of the process, killed the process, and started to back up the files to my remote server.

Unfortunately from these steps, only the file transfer to the server was visible on the recording.

Conclusion

Design considerations

- I enabled my user to access the backup folder and I executed all my scheduled tasks as my own user. This is generally not good practice. Creating a backup user who only has access to this folder, and who is the only one who can access it (besides SYSTEM), is a more secure way to handle this scenario. I also tried to use the SYSTEM user first, but that didn’t work, because I couldn’t execute some of the commands as SYSTEM from a scheduled task (I got an error: The operator or administrator has refused the request).

- The script triggers if ransomware directly deletes the honeyfile or creates a new file under the same name. This is rare, considering most ransomware adds a new extension to the filename. For my script I only needed delete logging to be configured for every file on my machine. Thus, I changed the file creation logging, and in my final script I only logged file creation in the ransomware folder. With this change every logged file creation is immediately suspicious, so I did not have to do additional filtering. (This was an issue previously, as XPath couldn’t handle it.) After the change the script can trigger on honeyfile deletion or on any file creation in the “honeyfolder.” If you monitor more folders for file creation, you have to choose another solution to solve this issue.

- PowerShell windows are not hidden. They pop up in front of the user when the scheduled tasks trigger. This is fine for testing but has to be changed in a real-life environment.

- If you can’t remove a file but you have read permissions, the script will upload it to the server every time the backup scheduled task is triggered. SCP won’t check whether the file already exists, so the given file will be uploaded repeatedly.

- Every time you open a PowerShell session, it creates 2 ps1 files and then removes them after execution. This means that every time the backup script finishes and its session closes, these files are removed, and since they are deleted files, they trigger the backup script again. This can be prevented by excluding these specific ps1 files from file deletion logging in the Sysmon config file.

- Instead of creating one big script that sets up everything, I went with a lot of smaller files and placeholder strings in the code (which can be replaced by using sed). This is just a PoC and this was the easiest way to handle the code, so I created the scriptlets this way. Anybody can modify them and create one big script with parameters if needed.

- During file backup I’m also not checking whether the files were forwarded to the remote server successfully.

- Some ransomware is not going to trigger my rules. My script only triggers if a specific file is created or deleted according to Sysmon. It is possible for ransomware to only modify the file content, which means it will never trigger my scheduled task.

- I decided to use only Microsoft-signed tools. The reason is that some security solutions can block execution based on the signer in case of an incident. For example, Microsoft Defender ATP has a function called “Restricted App Execution” which only lets MS-signed binaries run on the system. Even under this restriction my script is not blocked, so the backup function can continue to work.

- Using scheduled tasks to automate some actions is really a pain in the ass. I had to drop most of the tricks I wanted to use because of the limited filtering capabilities of scheduled tasks. There are other automation solutions on Windows, so I recommend that everybody look around and try out some other options first.

If you have ever handled malware infections or ransomware attacks, then you probably already know that quick reaction and a short incident response time are critical in these situations. Manual response is frequently not enough, which is why SOAR systems are deployed in a modern SOC. However, even a SOAR can be slow to react for some specific actions, especially when the alert triggering also takes some time. In this blog post I have shown a quick method of acting on a ransomware infection to save your data. While the method was only created for showcase purposes and is not a professional tool, it can give someone an idea of how or why to implement a quick incident response solution.

I created the script as a PoC because I wanted to test the possibilities of some basic process handling from scheduled tasks. I was also interested in how much load Sysmon event 23 puts on my machines. During my test, I recognized the benefits of this solution and found out how much such a prompt action can help in further investigation. The code is now available on github and I hope it will give somebody ideas for further testing. You can easily modify the necessary variables via sed (or any replace function) and then use the code to deploy the solution. You can find a more detailed manual on github.