This post shows a practical implementation of a prototype honeytoken based on Remote Template Injection and an Azure Function App. There are lots of honeytoken solutions on the market, ranging from free options to expensive commercial services. Many of them integrate nicely with your SIEM, while others lack this capability. Which one you choose depends on your needs. Sometimes, however, you need an open-source, cheap solution that is easy to deploy and use, whether for testing or for production. This article describes one such solution.

A few years back I was working a lot with various deception technologies, so I got familiar with honeytokens and honeydocs (document-based honeytokens). Even my BSc thesis was about honeytoken-based document tracking, so I definitely had an interest in the topic.

Later on, I started getting familiar with Azure and Sentinel out of curiosity. Around the same time, one of my employers decided to move to Azure and also wanted to test some deception technologies. Together, these two things motivated me to create a simple honeydoc solution as a proof of concept to show my company the benefits of deception technologies. Because the project was a PoC, the budget was limited, so I could only use free (or at least cheap) services.

The honeytoken solution in the blog post is based on the same ideas and principles, so you will find an implementation here that is simple and low-cost.

Requirements

The guidelines and recommendations I had to follow:

- Open-source/Azure-based: Since we were moving to Azure, I could only use open-source or Azure-based resources for my PoC (so, no commercial tools besides Azure resources).

- Scalable: The solution had to scale, because the company was pretty big.

- Automation: Eliminate as much manual work as possible. Automation was also required for scalability.

- Identifiable users: I had to be able to tell from which user’s machine the honeydoc was stolen/opened, so that the compromised machine/user could be identified.

Because it was just a PoC, I did not have to create a full-fledged solution. Thus, even in this post, I won’t provide a complete solution. I am simply going to introduce the basics of the idea.

Architecture

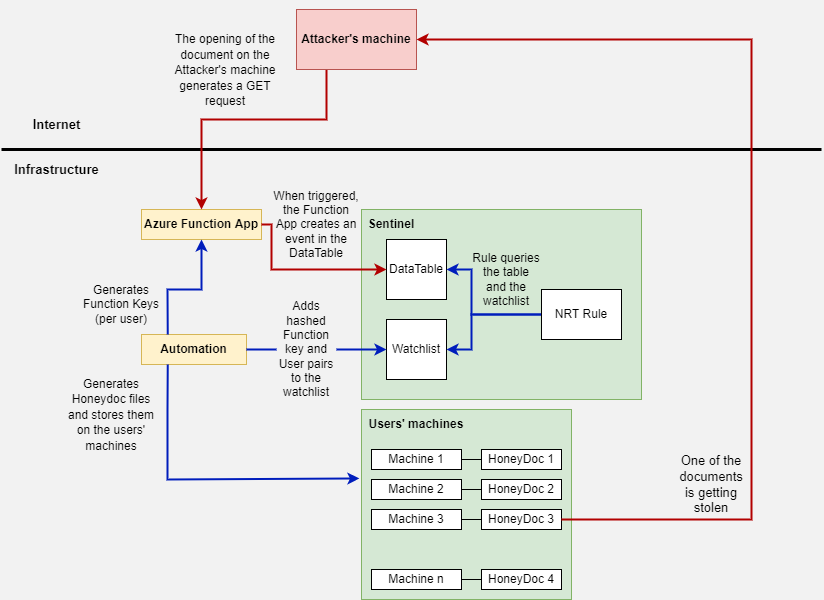

The solution is based on three different blocks:

- The first one is the honeydoc itself. This is, in my case, a docx file. The docx file reports back to a web server by using Remote Template Injection when it is opened.

- An Azure Function App written in PowerShell is the server part of the solution; it waits for a honeydoc to reach out. When it gets a GET request from a honeydoc, it forwards the event to a Log Analytics Workspace in Azure.

- The last part of the architecture is a rule in Sentinel that detects the events coming from Azure Function App to Log Analytics Workspace. (Sentinel is built on top of a Log Analytics Workspace).

Besides the core blocks, I also rely on automation logic that creates keys in the Function App and synchronizes this data with a Watchlist, so that the various users and computers can be told apart. The same automation is needed to revoke keys and honeydocs once they are compromised. This is not core functionality, and the solution works without it, but it eliminates manual work and makes it possible to differentiate between users.

Design of the solution:

The actions marked with the red arrows are triggered when somebody opens the document. The image presents the scenario when a stolen document is opened on the machine of the attacker who stole the file.

The actions marked with the blue arrows are the business-as-usual activities. These actions are carried out to set up and monitor the environment.

The revocation and regeneration of the ‘compromised’ keys and documents are also part of the business-as-usual activities, but they are not shown on the diagram.

1. Remote Template Injection

As I mentioned, the honeydoc solution is based on remote template injection. When an Office document is created from a template, the path to the template is saved in the document. By modifying the document at code level, you can change this path to anything, even to an online link. Whenever the file is opened, Office tries to reach out to this path, so after the modification it tries to access the online link. This download attempt is the beacon that reveals the opening of the document. The web server Office reaches out to is managed by us, so it is easy to detect a download attempt.

For a honeydoc, any such file opening is suspicious. The beacon is generated by Office itself, so if the file is opened in a text/hex editor or another tool, we won’t see a download attempt.

To find more details about remote template injection, how it works, and how to create a file like this please see my blog post about the topic here: Remote Template Injection.

The link to be used as the template path will be the URL of the Azure Function App we are going to create later on. See the details in the next section.
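As a rough illustration of the modification described above, here is a minimal Python sketch (not the author's script). It assumes the document was created from a template, so that the package already contains a `word/_rels/settings.xml.rels` part with an `attachedTemplate` relationship; the function name `set_remote_template` is hypothetical.

```python
import io
import re
import zipfile

# Relationship part where Word stores the attached-template path (assumed present).
RELS_PART = "word/_rels/settings.xml.rels"
TEMPLATE_TYPE_SUFFIX = "/relationships/attachedTemplate"


def set_remote_template(docx_bytes: bytes, template_url: str) -> bytes:
    """Return a copy of the docx whose attached-template Target is template_url."""
    if "&" in template_url or '"' in template_url:
        # The post reports that Word fails to open the file if '&' appears here;
        # a double quote would break the XML attribute.
        raise ValueError("template URL must not contain '&' or '\"'")

    def patch(xml: str) -> str:
        def fix(match: re.Match) -> str:
            tag = match.group(0)
            if TEMPLATE_TYPE_SUFFIX not in tag:
                return tag  # leave unrelated relationships untouched
            # 'Target="' does not match 'TargetMode="', so only the path changes.
            return re.sub(r'Target="[^"]*"',
                          lambda _: f'Target="{template_url}"', tag)
        return re.sub(r"<Relationship\b[^>]*>", fix, xml)

    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as src, \
         zipfile.ZipFile(out, "w", zipfile.ZIP_DEFLATED) as dst:
        for item in src.infolist():
            data = src.read(item.filename)
            if item.filename == RELS_PART:
                data = patch(data.decode("utf-8")).encode("utf-8")
            dst.writestr(item, data)  # every other part is copied unchanged
    return out.getvalue()
```

Calling `set_remote_template(open("decoy.docx", "rb").read(), url)` and writing the result back to disk would yield the honeydoc; `decoy.docx` is a placeholder file name.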

When I first wrote a blog post about Remote Template Injection, I also tested the method against various email security solutions. Back then, none of them marked the file as suspicious or identified it as a Remote Template Injected document. In the last 3 months, I had the chance to test some of the old ones again, along with a few new email security solutions, and this time many of them recognized the technique or blocked my email outright.

I used Remote Template Injection, because it is well-known, works on any OS (if the document is opened by Office) and is easy to utilize. Since it is nothing but a technique it is also free to use, meaning I did not have to buy any commercial honeytoken service.

2. Azure Function App to detect the opening

The Function App has 3 jobs to do. It listens on the HTTP(S) port and receives every request (1). Each request is a potential honeydoc file opening. The same function parses the request and processes its content (2). Then it forwards the resulting event to a Log Analytics Workspace (3), where a Sentinel Analytics rule can detect it.

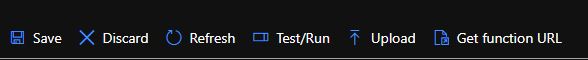

So, the first step is to create a Function App with the PowerShell Core runtime. If you want to use your own code, you can of course use any other runtime as well. Once that is done, create a Function with an HTTP Trigger.

When you use Function App, it provides you a URL where the code is available. You can get this URL by clicking on the ‘Get function URL’ button from the ‘Code + Test’ tab. This is the URL we need to add to our Remote Template Injected document.

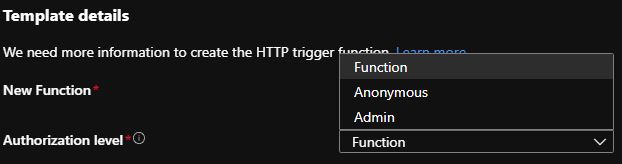

The Function App also gives you some security choices. When you choose the HTTP Trigger option, you can also pick an Authorization Level. The two relevant ones for us are the Function and the Anonymous Authorization Levels. With the first one, an API key is checked before the HTTP request reaches our code, so somebody without a key won’t be able to use our Function App. The latter allows anybody to trigger our Function App just by having its URL.

But why is this important to us? As I said, one of the requirements was to be able to tell from which user’s machine the document is coming, while keeping the solution scalable. One thing that heavily limits our options here is Remote Template Injection itself. With this method, it is Office that triggers the GET request. Its format is therefore fixed: there is no option to make it a POST request or to send additional data (something unique per user) in the body. Since the only option is a GET request, a unique identifier can only be forwarded in the URL query. The issue is that we can only put one variable into the query, because variables are separated by an ‘&’ character and Office fails to open the Word document if an ‘&’ character is found in this specific field. (At least, I have not found a way to circumvent this yet.) Thus, we can’t use ‘&’, and therefore we cannot add more than one field.

Lastly, an API key needs to be forwarded to be able to reach the function. This defensive mechanism is needed so people without the key can’t trigger the function. In a GET request the only way to do this is to forward the key in the query in the ‘code’ variable like this: https://testcode-honeydoc.azurewebsites.net/api/HttpTrigger1?code=xxxxAPIKEYxxxx .

So, we have to use a GET request. We can only use one variable in the query. And that one variable has to be the API key in case of Function Authorization Level.
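To make the single-variable constraint concrete, here is a small Python sketch (an illustration, not part of the original code) that builds the template URL with only the `code` variable and refuses anything that would put an ‘&’ into the field. The helper name `build_template_url` is hypothetical.

```python
from urllib.parse import parse_qsl, quote, urlsplit


def build_template_url(function_url: str, api_key: str) -> str:
    """Build the honeydoc template URL carrying exactly one query variable."""
    if urlsplit(function_url).query:
        raise ValueError("function_url must not already contain a query")
    # Percent-encode the key so characters such as '&' or '=' inside it
    # cannot split the query into several variables.
    url = f"{function_url}?code={quote(api_key, safe='')}"
    if "&" in url:
        # Per the post, Word refuses to open the document in this case.
        raise ValueError("template URL must not contain '&'")
    return url
```

For example, `build_template_url("https://testcode-honeydoc.azurewebsites.net/api/HttpTrigger1", "xxxxAPIKEYxxxx")` reproduces the URL format shown above.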

So, our choices are:

- Anonymous Auth level: In this case, no API key is needed so we can use the only variable in the GET query for something else. The benefit is that we can add anything to our query and parse it by our code. The drawback is that anybody can trigger our Function App. Even though we can filter out random scans and similar activities, this will be done by our code, which means we have to pay for these executions. Thus, somebody knowing the URL of the App can intentionally generate a huge amount of cost for us.

- Function Auth level: In this case, an API key needs to be provided in the GET request. We can create a unique API key for each user so we can still identify them properly. The benefit is that people without the key won’t be able to trigger the function app. The drawback is that we won’t be able to add additional info to the query. Therefore, to be able to identify the user whose machine stored the opened document, we need to have a different Function key for each user. Then, we have to store the API key-user correlation somewhere, and to remain scalable, we must automatically generate these keys and store the correlations. This involves some additional automation. (The per-user API keys setup is not necessarily a problem. This way when one machine/user is compromised we don’t have to recreate and redeploy the honeydoc for all of the users. We only have to change the key and the document of the compromised user.)

During my PoC, I used the second option because of its benefits over the Anonymous Authorization Level.

According to my last discussion with MS, we can generate any number of keys per Function App, so we can cover any number of users. They were not sure how well the solution would scale with tons of keys, though. But since we don’t expect our Function App to be triggered frequently, this should not be an issue.

Storing an API key in a document and sending it in a GET query is usually not safe and should be avoided. In this case, however, it is not a problem. The malicious behavior is detected through the usage of the API key, because legitimate actors should not know this information. Usage of the API key means somebody had access to the honey document, which nobody should ever access. Once the API key has been used, the user/machine/document/key should be considered compromised. After remediation, the key should be removed from the Function App, and a new key and decoy document should be created for the ‘compromised’ user and machine.

The code itself is fairly simple because Microsoft provides you a nice skeleton to be used when sending data to Sentinel. The first part of the code is to communicate with Sentinel. Because it is straightforward, and you can find it on Microsoft’s page, I won’t add too much comment:
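The original PowerShell is not reproduced here. As a hedged sketch of what that skeleton does, the Python below builds the `SharedKey` authorization header for the Log Analytics HTTP Data Collector API, assuming that is the API in use (the post works with a workspace ID and primary key, which matches it). The function names are hypothetical, and the actual HTTP POST is left out.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timezone
from email.utils import format_datetime


def build_signature(workspace_id, shared_key, date, content_length,
                    method="POST", content_type="application/json",
                    resource="/api/logs"):
    """Sign the request with HMAC-SHA256 using the base64-decoded workspace key."""
    string_to_sign = (f"{method}\n{content_length}\n{content_type}\n"
                      f"x-ms-date:{date}\n{resource}")
    digest = hmac.new(base64.b64decode(shared_key),
                      string_to_sign.encode("utf-8"),
                      hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode()}"


def build_request(workspace_id, shared_key, log_type, body: bytes, now=None):
    """Return the URL and headers for posting `body` (JSON bytes) to the workspace."""
    now = now or datetime.now(timezone.utc)
    rfc1123 = format_datetime(now, usegmt=True)  # e.g. 'Mon, 01 Jan 2024 00:00:00 GMT'
    headers = {
        "Content-Type": "application/json",
        "Authorization": build_signature(workspace_id, shared_key, rfc1123, len(body)),
        "Log-Type": log_type,  # the service appends '_CL' to form the table name
        "x-ms-date": rfc1123,
    }
    url = (f"https://{workspace_id}.ods.opinsights.azure.com"
           "/api/logs?api-version=2016-04-01")
    return url, headers
```

The returned URL and headers can be passed to any HTTP client together with the JSON body.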


Then the code parses the incoming request to utilize the useful information. By default, when Office opens a Remote Template Injected file it sends some good info. I am parsing out the fields I need, but feel free to take a look at some raw logs and pick what you need:


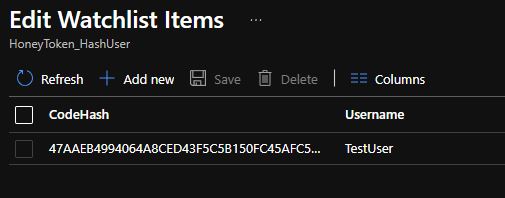

I parsed everything I needed, built up an object, and then transformed it into JSON. As you can also see, instead of adding the API key to the object, I only added the hash of the API key. To identify the user/machine, we have to store the API key or its hash together with a machine name or username. Storing the API key in clear text would be bad, because it could then leak easily and create FPs (leaking the key but not the honeydoc is an FP in this case). So instead, I only store the hash of the API key in Sentinel, and the Function App forwards only the hashed value of the key it received in the request.

In the last part of the code, I define the workspace ID, the primary key, the name of the table to push the data into, and the TimeGenerated value, and then call the function that pushes the data into Sentinel (Log Analytics Workspace).
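A minimal Python sketch of this step follows. The post does not say which hash function or which fields the original code uses, so SHA-256, the field names (`ApiKeyHash`, `UserAgent`, `SourceIp`) and the chosen headers are all assumptions made for illustration.

```python
import hashlib
import json


def hash_api_key(api_key: str) -> str:
    """Hash the key so the clear-text value never reaches the log table."""
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()  # SHA-256 is an assumption


def build_event(query: dict, headers: dict) -> str:
    """Turn the incoming GET request into a JSON array with one event record."""
    lowered = {name.lower(): value for name, value in headers.items()}
    event = {
        # Only the hash of the 'code' query variable is stored.
        "ApiKeyHash": hash_api_key(query.get("code", "")),
        "UserAgent": lowered.get("user-agent", ""),
        # First entry of X-Forwarded-For, kept as received.
        "SourceIp": lowered.get("x-forwarded-for", "").split(",")[0].strip(),
    }
    return json.dumps([event])
```

The resulting string is what would be sent as the request body to the workspace.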

Also, if the code execution is successful, I return with HTTP Status Code 200. But this part is not important for our honeydoc to work.


Find the PowerShell code on GitLab.

You can find some Write-Host commands in the code. They are there purely for troubleshooting purposes, so feel free to remove them.

3. Sentinel

Sentinel being a SIEM covers the real detection and alert creation part of the scenario. The Function App forwards the detection events into the HoneyDoc_Detectionv2_CL table (for me), so we simply have to create a rule to trigger when a new event is pushed into this table.

Sentinel also has a new type of rule called a Near Real-Time (NRT) rule. These rules were designed to trigger on simple activities with low latency and to cover situations that can be detected from a single event. We can use an NRT rule because we need a detection that triggers when a single event arrives in the HoneyDoc_Detectionv2_CL table.

So, the query for our NRT rule can look like this:


This is literally all we need. Additional entity parsing can be done in the rule if needed.

However, we have a further requirement: identifying the user whose machine the document came from. For this, the API key hash–username pairs need to be stored in a Watchlist, and we have to correlate them with the incoming data in the table.
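Conceptually, this correlation is a lookup of the incoming hash in the Watchlist. The Python sketch below only illustrates that logic outside of Sentinel; it is not the rule query, and the column names `ApiKeyHash` and `UserName` are assumptions.

```python
def enrich(events, watchlist):
    """Attach the username from the watchlist to each event, matching on the key hash."""
    by_hash = {row["ApiKeyHash"]: row["UserName"] for row in watchlist}
    # Events with an unknown hash are kept, so they would still raise an alert.
    return [{**event, "UserName": by_hash.get(event["ApiKeyHash"], "unknown")}
            for event in events]
```

Keeping unmatched events mirrors a left outer join: a hit with a hash that is missing from the Watchlist is still worth investigating.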

This is my Watchlist (HoneyToken_HashUser) with 1 entry:

So we can modify the query to enrich the alert with the real username:


And this is the output (only a few fields are shown):

In this scenario, ‘user’ and ‘machine’ can be used interchangeably. Each user has one machine, and each machine is owned by only one user.

4. The automation logic

Because I only created my solution as a PoC, I did not write the automation part of the code. This is needed to make the honeytoken detection scalable.

The automation logic has to do the following things:

- Create unique Function keys for each user and store them in the Function app. Two resources to help you with this task: https://markheath.net/post/managing-azure-function-keys and https://github.com/Azure/azure-functions-host/wiki/Key-management-API . This step is only needed if you want to use Function Authorization Level and differentiate the users based on the API key.

- Store the hashed API keys in a Sentinel Watchlist. A resource to help you with this https://medium.com/codex/using-infrastructure-as-code-to-deploy-azure-sentinel-watchlists-to-lower-ip-false-positives-e78e3adba9f3 . You can also use the Sentinel Playbook Watchlist connector.

- Create unique honeydocs for each user and store them on their machines. For the creation part, you can use the script I created in the past: version 1 or version 2. Putting it onto the machine can be done with various endpoint management solutions, for example with GPO. Use the method you use normally for similar tasks.

- Additionally, revoke ‘compromised’ documents and keys and deploy replacements for them.
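The bookkeeping behind these steps can be sketched in a few lines of Python. This is an illustration under several assumptions: keys are generated locally and registered with the Function App afterwards (that upload, the Watchlist import and the document deployment are not shown), SHA-256 is the hash, and the column names and function names are hypothetical.

```python
import csv
import hashlib
import io
import secrets


def new_key() -> str:
    """Generate a random key; token_urlsafe never emits '&', which Word rejects."""
    return secrets.token_urlsafe(32)


def key_hash(api_key: str) -> str:
    return hashlib.sha256(api_key.encode("utf-8")).hexdigest()


def provision(users):
    """Create one unique key per user plus the hash-to-user rows for the Watchlist."""
    keys = {user: new_key() for user in users}
    rows = [{"UserName": user, "ApiKeyHash": key_hash(key)}
            for user, key in keys.items()]
    return keys, rows


def rotate(keys, user):
    """Replace a compromised user's key; returns (revoked_key, new_watchlist_row)."""
    revoked = keys[user]
    keys[user] = new_key()
    return revoked, {"UserName": user, "ApiKeyHash": key_hash(keys[user])}


def watchlist_csv(rows) -> str:
    """Render the rows as CSV, a format Watchlists can be created from."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["UserName", "ApiKeyHash"],
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()
```

Only the hashes leave this process for Sentinel; the clear-text keys go to the Function App and into the per-user honeydocs.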

Summary

I created the basis of this solution in the past as a Proof of Concept. It is therefore more of a thought-provoking solution than a polished one. Still, the techniques mentioned here and the code itself can be a good starting point if you want to create a cheap honeytoken solution for yourself.