Archiving is a fairly new feature in Sentinel, introduced to reduce the cost of long-term retention for events that are never or only rarely queried. Previously, you either had to store such data outside of Sentinel or pay the costly retention fees; now you can keep it in the Log Analytics workspace at a lower cost thanks to Archiving. However, there is a limitation. “The archive feature… can only be set at the table level, not the workspace level,” according to Microsoft. To work around this limitation, I prepared a script and this article.

Find more info about archiving here.

In various scenarios, you can expect frequent table creation in Sentinel. It can be time-consuming to configure all the tables each time, especially if they are created automatically. This is why normal retention configuration at the workspace level is really convenient. If a new table is introduced but not individually configured, it will use workspace-level retention.

For archiving, there is no workspace-level configuration capability. You must define the archiving period for each table individually. This means you have to configure it for each new table after its creation, which is a never-ending task.

A typical situation in which tables are created constantly is when offline logs are uploaded to Sentinel for a specific investigation. The SOC is looking into something and requires logs from a machine that is not onboarded to Sentinel. In this case, the log can be extracted from the machine and sent to Sentinel via some automation. To aid in the investigation and log separation, events like this can be uploaded to a new table with a name like: <incident_id>_<log_type>_CL. For example, 12345_WinSecurity_CL.

This is a real-life scenario that I have utilized before, but with more complex automation rather than just picking up logs.

So, my Function App is going to help you in the following scenarios:

- In Sentinel, configure the archiving period for new tables. Every table that is not excluded will be configured based on the specified archiving period value.

- You can also use regular expressions to select tables that should get a different archiving period than the default one you set for the other tables. As a result, you can follow a naming convention in your table names and define unique archiving for those tables based on the pattern in the name. Alternatively, a regular expression can be used to exclude tables from archiving.

The script was not created to configure all the existing tables. It is going to time out before it can go through all the tables. If you still want to configure every table, you must execute the Function App multiple times.

The code

I’ll explain the four separate parts of the code in the next four sub-sections so you can easily grasp what’s going on and adjust the code for yourself.

Find the code on GitLab. Please keep in mind that the code on GitLab may differ from the code in this post as I tend to enhance my scripts over time.

1. Definitions

The first part of the code is to define variables to be used later.

param($Timer)

# parameters

$SubscriptionId = $env:subscriptionId

$LogAnalyticsResourceGroup = $env:rgName

$LogAnalyticsWorkspaceName = $env:workspaceName

$TenantID = $env:tenantId

$TotalRetentionInDays = $env:totalRetentionInDays

try{

$ExcludedTables = $env:excludedTables.Split(',')

$UniqueTables = $env:uniqueTables -replace ",","`r`n" | ConvertFrom-StringData

}

catch{

Write-Warning "Incorrect values have been provided for the excludedTables or uniqueTables env variables"

exit

}

Most of the variables are self-explanatory. You can store the values in environment variables when you create your Function App, or you can simply hard-code them in the script.

The ‘$TotalRetentionInDays’ variable specifies how long you want to keep the data altogether. This value is the sum of the retention and the archive periods. For example, if you set $TotalRetentionInDays to 120 days and the retention period is already 90 days (the free retention period in Sentinel), the archiving period will be 30 days.
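The arithmetic can be sketched in a few lines. The helper name Get-ArchiveDays and the sample values are illustrative assumptions; the Function App itself does not contain this function.

```powershell
# Hypothetical helper (not part of the Function App): derives the archive
# period from the total retention and the interactive retention.
function Get-ArchiveDays {
    param(
        [int]$TotalRetentionInDays,
        [int]$RetentionInDays
    )
    # The archive period is whatever remains after the interactive retention.
    # A total below the retention leaves nothing to archive.
    return [Math]::Max(0, $TotalRetentionInDays - $RetentionInDays)
}

Get-ArchiveDays -TotalRetentionInDays 120 -RetentionInDays 90   # 30
```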

$ExcludedTables: The list of tables that you do not want to configure. The environment variable holds a comma-separated list of entries. As the code in section 4 shows, each entry is matched against the table names as a regular expression.

$UniqueTables: In this variable, you can specify regular expressions to match against table names, together with the number of days you want to keep the data in the matching tables. For example, the “^Zoom_=100,LimaCharlie=111” value (without quotes) configures all the tables starting with “Zoom_” to be kept for 100 days and all the tables with “LimaCharlie” in their name to be kept for 111 days.
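To see what the two settings turn into, here is a small sketch that runs the same parsing steps on literal strings instead of environment variables. The sample values are assumptions chosen for illustration.

```powershell
# Example values only; in the Function App these come from the
# excludedTables and uniqueTables environment variables.
$excludedSetting = 'AzureActivity,O365_CL'
$uniqueSetting   = '^Zoom_=100,LimaCharlie=111'

# Comma-separated list -> array of patterns
$ExcludedTables = $excludedSetting.Split(',')

# "pattern=days" pairs -> hashtable; ConvertFrom-StringData expects one
# pair per line, hence the comma-to-newline replacement.
$UniqueTables = $uniqueSetting -replace ',', "`r`n" | ConvertFrom-StringData

$ExcludedTables.Count          # 2
$UniqueTables['^Zoom_']        # 100
$UniqueTables['LimaCharlie']   # 111
```

Two consequences of this parsing are worth keeping in mind. A pattern cannot contain a comma, because the comma is the pair separator. Also, as far as I know, ConvertFrom-StringData treats the backslash as an escape character, so a pattern such as \d would probably have to be written as \\d in the setting.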

As of now, there is no ARM template to be used to deploy this Function App.

Please be aware that the initial release of my code used wildcards, whereas the new one uses regular expressions.

2. Authentication

As the first line of the snippet below shows, I use a Managed Identity. Once the Function App is created, enable its Managed Identity and grant it the Log Analytics Contributor role. This role is required to configure archiving on the Log Analytics workspace.

Connect-AzAccount -Identity

Set-AzContext -SubscriptionID $SubscriptionId

3. Gathering all the tables for the defined Log Analytics workspace

First, we put together the request that will be sent to Azure to retrieve all the tables.

$AzureAccessToken = (Get-AzAccessToken).Token

$LaAPIHeaders = New-Object "System.Collections.Generic.Dictionary[[String],[String]]"

$LaAPIHeaders.Add("Content-Type", "application/json")

$LaAPIHeaders.Add("Authorization", "Bearer $AzureAccessToken")

$TablesApi = "https://management.azure.com/subscriptions/$SubscriptionId/resourcegroups/$LogAnalyticsResourceGroup/providers/Microsoft.OperationalInsights/workspaces/$LogAnalyticsWorkspaceName/tables" + "?api-version=2021-12-01-preview"

Then we send the request to Azure to get the necessary information.

$TablesApiResult = Invoke-RestMethod -Uri $TablesApi -Method "GET" -Headers $LaAPIHeaders -MaximumRetryCount 3 -RetryIntervalSec 12

$TablesApiResult now contains all the information about every table that we need to configure archiving properly.
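Before changing anything, it can help to look at what came back. The sketch below flattens the result into one row per table. It uses a mocked response so that it runs without Azure access; the mock only contains the fields the script reads (name, plan, retentionInDays, totalRetentionInDays), and the table names and values are made up.

```powershell
# Mock of the response shape, limited to the fields the script reads.
# A real response contains more properties per table.
$TablesApiResult = [pscustomobject]@{
    value = @(
        [pscustomobject]@{
            name       = 'SecurityEvent'
            properties = [pscustomobject]@{ plan = 'Analytics'; retentionInDays = 90; totalRetentionInDays = 90 }
        },
        [pscustomobject]@{
            name       = '12345_WinSecurity_CL'
            properties = [pscustomobject]@{ plan = 'Analytics'; retentionInDays = 90; totalRetentionInDays = 120 }
        }
    )
}

# Flatten the nested properties into one row per table
$Overview = foreach ($ta in $TablesApiResult.value) {
    [pscustomobject]@{
        Table       = $ta.name
        Plan        = $ta.properties.plan
        Retention   = $ta.properties.retentionInDays
        Total       = $ta.properties.totalRetentionInDays
        ArchiveDays = $ta.properties.totalRetentionInDays - $ta.properties.retentionInDays
    }
}

$Overview | Format-Table
```

With a real response, the same loop gives a quick overview of which tables have no archive period yet.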

4. Filtering and configuration

In the main ‘foreach’ loop, I go through all the tables and determine whether or not the given table is excluded. If a table is in the $ExcludedTables variable, it will be ignored whether or not it is in the UniqueTables list.

foreach($regex_entry in $ExcludedTables)

{

if($ta.name.Trim() -match $regex_entry)

{

$isExcluded = $true

break

}

}

This script only configures ‘Analytics’ tables and not the ‘Basic’ ones, and it only touches tables that are not excluded (identified in the previous step).

foreach ($ta in $TablesApiResult.value){

if ($ta.properties.Plan -eq 'Analytics' -And !$isExcluded){...}

}

Inside the ‘if’ condition, if a table matches an entry in the $UniqueTables variable, the code saves the unique number of days as $Total. If it matches none of them, the default configured value is used as $Total. The variable $Total stores the TotalRetentionInDays value for the processed table.

foreach ($key in $UniqueTables.Keys) {

# -match treats the key as a regular expression (-like would treat it as a wildcard)
if ($ta.name.Trim() -match $key)

{

$Total = [int]($UniqueTables[$key])

}

}

if ($Total -eq $null)

{

$Total = [int]($TotalRetentionInDays)

}

The rest of the code simply constructs an HTTP request and sends it to Azure’s REST API to configure the table (one at a time).

if([int]$ta.properties.totalRetentionInDays -ne [int]$Total )

{

$TablesApi = "https://management.azure.com/subscriptions/$SubscriptionId/resourcegroups/$LogAnalyticsResourceGroup/providers/Microsoft.OperationalInsights/workspaces/$LogAnalyticsWorkspaceName/tables/$($ta.name.Trim())" + "?api-version=2021-12-01-preview"

$TablesApiBody = "{'properties': {'retentionInDays': null,'totalRetentionInDays':$($Total)}}"

try {

$TablesApiResult = Invoke-RestMethod -Uri $TablesApi -Method "PUT" -Headers $LaAPIHeaders -Body $TablesApiBody -MaximumRetryCount 3 -RetryIntervalSec 5

Write-Information "$($ta.name.Trim()) DONE - TotalRetentionInDays: $($Total) days"

}

catch {

Write-Warning -Message "Update-TablesRetention $($_) for table: $($ta.name.Trim())"

}

}

Find the full code on GitLab.
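The filtering rules from this section can be condensed into a single function, which is handy for a dry run before the Function App touches anything. This is a simplified sketch, not the code from GitLab: the function name Resolve-TotalRetention, its parameters, and the sample patterns are assumptions.

```powershell
# Hypothetical helper: returns the total retention (in days) a table should
# get, or $null when the table is excluded.
function Resolve-TotalRetention {
    param(
        [string]$TableName,
        [string[]]$ExcludedTables,
        [hashtable]$UniqueTables,
        [int]$DefaultTotal
    )
    # Exclusion wins over everything else
    foreach ($pattern in $ExcludedTables) {
        if ($TableName -match $pattern) { return $null }
    }
    # A matching unique pattern overrides the default
    foreach ($key in $UniqueTables.Keys) {
        if ($TableName -match $key) { return [int]$UniqueTables[$key] }
    }
    # Everything else gets the default value
    return $DefaultTotal
}

$excluded = @('^AzureActivity$')
$unique   = @{ '^Zoom_' = '100'; 'LimaCharlie' = '111' }

Resolve-TotalRetention 'Zoom_Meetings_CL' $excluded $unique 120       # 100
Resolve-TotalRetention '12345_WinSecurity_CL' $excluded $unique 120   # 120
Resolve-TotalRetention 'AzureActivity' $excluded $unique 120          # no output, the table is excluded
```

Because the exclusion entries are regular expressions too, an unanchored entry such as AzureActivity would also exclude any other table whose name contains that text; anchoring it with ^ and $ avoids this. If a table name matches more than one unique pattern, the result depends on the enumeration order of the hashtable, so it is safer to keep the patterns mutually exclusive.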

Configuration

Steps to create and configure the Function App:

- Create a PowerShell Function App.

- Create a new timer-triggered function and paste my code into it. Set it to run once per day or once per week. It does not need to run more often than that.

- Enable Managed Identity for the Function App.

- Grant Log Analytics Contributor access to the Managed Identity.

- Define all of the environment variables in the code.

- $env:subscriptionId: The subscription of the Log Analytics workspace (LAW) you want to configure.

- $env:rgName: Resource Group name of the Log Analytics workspace.

- $env:workspaceName: Name of the LAW.

- $env:tenantId: trivial

- $env:totalRetentionInDays: The number of days you want to keep the logs for. This is the total of the retention and archiving days. Thus, if your retention is set to 90 days and you want the data to be kept in archive for 30 days, you must set totalRetentionInDays to 120 days. If you enter a number that is less than the retention days, you will receive a warning and no table configuration will be done.

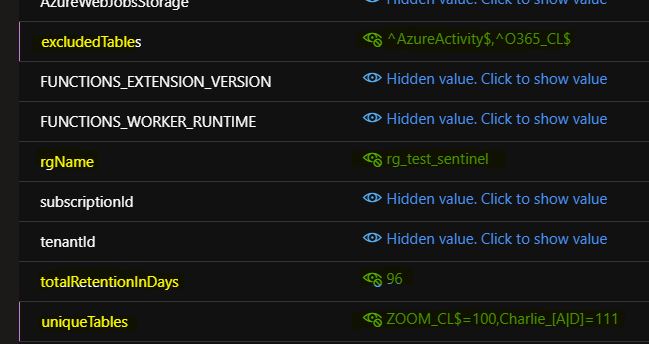

- $env:excludedTables: A list of tables that should be excluded from the configuration.

- $env:uniqueTables: A list of regex and number pairs corresponding to specific tables and their totalRetentionInDays value.

- In the function.json file, configure the schedule of the Function App. It is enough to run the code once per retention period. So, if your retention period is 60 days, running this code once every 60 days is sufficient, because no logs (Analytics events) are dropped before 60 days. But to be on the safe side, I like to execute this code once or twice a week, just to balance out accidental misconfigurations and errors in the execution.
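As an illustration of the last step, a function.json for a twice-weekly run could look like the fragment below. The schedule value is an example and not the one I use in production: it is a six-field NCRONTAB expression (second, minute, hour, day, month, day of week) that fires at 03:00 on Mondays and Thursdays. The binding name Timer matches the param($Timer) line at the top of the script.

```json
{
  "bindings": [
    {
      "name": "Timer",
      "type": "timerTrigger",
      "direction": "in",
      "schedule": "0 0 3 * * 1,4"
    }
  ]
}
```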

Sample configuration (some values are hidden):

With this setting, I tell the Function App to leave the AzureActivity and O365_CL tables untouched, to keep all the tables ending with “ZOOM_CL” for 100 days, to keep all the tables containing “Charlie_A” or “Charlie_D” for 111 days, and to set the total retention of every other table to 96 days.

And the magic

After deployment, the Function App searches for and configures all tables that are not excluded. This means that if you create a new table in your workspace, you won’t have to worry about losing that data after the retention period due to a lack of archiving. From this point forward, the archiving configuration is done automatically for all new (non-excluded) tables. This is especially useful if you create a lot of tables, or if you have people on your team who can trigger table creation (via some automation) but do not have the permission to configure the archiving for those tables.

As I stated previously, this code is for archiving and not for retention configuration. So, if you enter a totalRetentionInDays value lower than the current retention for the table, then no changes will be made to that table. However, based on my code, writing a script that can configure both retention and archiving is not difficult.
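As a starting point for such a script, the request body could carry an explicit retentionInDays value instead of null. The following is an untested sketch under that assumption; the helper name New-TableRetentionBody is hypothetical, and the property names are the ones already used in the request body above.

```powershell
# Hypothetical helper: builds the JSON body for the table update request
# with both the interactive retention and the total retention set.
function New-TableRetentionBody {
    param(
        [int]$RetentionInDays,
        [int]$TotalRetentionInDays
    )
    # The total covers retention plus archive, so it cannot be the smaller value
    if ($TotalRetentionInDays -lt $RetentionInDays) {
        throw "totalRetentionInDays ($TotalRetentionInDays) must not be lower than retentionInDays ($RetentionInDays)"
    }
    @{
        properties = @{
            retentionInDays      = $RetentionInDays
            totalRetentionInDays = $TotalRetentionInDays
        }
    } | ConvertTo-Json -Compress
}

$TablesApiBody = New-TableRetentionBody -RetentionInDays 90 -TotalRetentionInDays 120
$TablesApiBody
```

Building the body with ConvertTo-Json instead of string concatenation keeps the JSON valid regardless of the values, and the guard at the top catches the case where the total would be shorter than the retention before any request is sent.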