DCRs and their ingestion-time transformations have been around for quite a while and are common in modern Sentinel deployments. In this article I share several specific configurations that I've found particularly useful during SIEM onboarding, migration, and troubleshooting.

The primary focus of this article is to showcase the versatility of DCRs, demonstrating how they can simplify your tasks and enhance the robustness of your deployments.

Scenarios:

The following strategies are ones I’ve utilized during past SIEM migrations to manage costs effectively, verify incoming data, and detect potential misconfigurations.

1. Stopping the traffic flow

When moving from another SIEM system to Microsoft Sentinel, you need to onboard log sources into Sentinel while still sending logs to the original system. Since the migration process takes time, logs will be stored twice, once in each system, during this interim period.

To avoid duplicates and keep the cost low, you can temporarily disable logging in the new system once successful onboarding is confirmed. This is feasible if you can quickly disable and re-enable logging without significant changes to any systems. Microsoft Sentinel provides this flexibility, so you can effectively manage the transition without storing duplicates while the old SIEM is still in use. Once the new system is ready to go live, you can re-enable logging in Sentinel seamlessly.

An effective and centrally manageable way to achieve this is by setting up an ingestion-time transformation to filter out all incoming logs:

- First, confirm that the log onboarding is successful.

- Next, create an ingestion-time transformation to discard all incoming logs using this simple filter:

source | where false

- When you need the logs again, simply remove the transformation.
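The confirmation in the first step can be as simple as a workspace query run before the filter is applied. The following is a minimal sketch, assuming the source lands in CommonSecurityLog (swap in whichever table your connector writes to) and that a one-hour window is enough to see traffic:

```kql
// Workspace query (run in Logs, not inside a DCR).
// Assumption: the onboarded source writes to CommonSecurityLog.
CommonSecurityLog
| where TimeGenerated > ago(1h)
| summarize Events = count(), LastSeen = max(TimeGenerated) by DeviceVendor, DeviceProduct
| order by LastSeen desc
```

An empty result or a stale LastSeen value would suggest that onboarding is not working yet, in which case it is too early to add the filter.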

SIEM migration:

- During a migration, you can use this method to verify that the data is being received and is in the correct format before temporarily disabling logging to save costs.

2. Sampling

When bringing a new log source into Microsoft Sentinel, you might encounter uncertainties regarding the final cost, log format accuracy, and connection reliability. To prevent an immediate high cost by forwarding all logs to your SIEM right away, you can use sampling, which involves sending only a portion of the logs.

Here are some scenarios where sampling can be beneficial:

- Cost estimation: If you’re unsure about the cost of a log source, forwarding only 1% of the events (sampling) can help you estimate the final cost without overspending. This approach is useful in any SIEM implementation.

- Migration to a new tool: During the migration from one tool to another, you might want to avoid significant log duplication. Instead of stopping traffic (as previously mentioned), you can enable sampling. Sampling forwards a subset of the logs, letting you continuously verify the connection and actively work with the data while keeping costs manageable.

- Log Validation: If you need sample logs to verify format and value before fully enabling or disabling logging, sampling can provide the necessary insights.

Let’s say we want to forward only 1% of the logs and drop the other 99%. An ingestion-time transformation processes events individually without maintaining state, so it cannot forward exactly 1% of the data. However, you can implement sampling logic that forwards each log with a 1% probability. Although random functions such as rand() aren’t allowed in ingestion-time transformations, a workaround is to take a part of the TimeGenerated field (e.g., the microsecond part) and let a log through only if that part is divisible by 100. Assuming logs are evenly distributed over time, this method forwards roughly 1% of the logs (each log has a 1% chance of being forwarded):

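source | where datetime_part('microsecond', TimeGenerated) % 100 < 1

Ensure your events are evenly distributed over time and have precise timestamps for this method to work effectively. For example, confirm that the timestamps are precise to the microsecond: if they only carry millisecond precision, the microsecond part always ends in 000 and every event passes the filter.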
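One way to check that assumption is to look at how the microsecond values are spread while the source is still flowing unsampled, for example right after onboarding is confirmed. This is only a sketch of a workspace query, with CommonSecurityLog and the one-hour window as placeholder assumptions:

```kql
// Workspace query (run in Logs, not inside a DCR).
// Counts events per bucket of the same expression the sampling filter uses.
// Assumption: the source writes to CommonSecurityLog and is not yet sampled.
CommonSecurityLog
| where TimeGenerated > ago(1h)
| extend Bucket = datetime_part('microsecond', TimeGenerated) % 100
| summarize Events = count() by Bucket
| order by Bucket asc
```

If nearly all events fall into bucket 0, the timestamps are probably not precise enough, and the filter would forward far more than 1% of the logs.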

In the CommonSecurityLog table, where logs from multiple vendors might be present, not all vendors generate the same volume of logs. If you want to retain all logs from VendorA, 10% from VendorB, and 1% from VendorC, you can use a transformation like this:

source | where DeviceVendor == "VendorA"

or (DeviceVendor == "VendorB" and datetime_part('microsecond', TimeGenerated) % 10 < 1)

or (DeviceVendor == "VendorC" and datetime_part('microsecond', TimeGenerated) % 100 < 1)

I often employ this sampling strategy before activating logging for tables like AADNonInteractiveUserSignInLogs, Syslog, CommonSecurityLog, SecurityEvent, and Event. This helps manage costs for clients initially, before any tuning and cost/value optimization.
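For cost estimation, the sampled volume can then be scaled back up to approximate the full volume. The query below is a rough sketch under the assumption that the per-vendor sampling rates are the ones from the transformation above (1/1, 1/10, 1/100). It uses the standard _BilledSize column, and the result is only as good as the even-distribution assumption:

```kql
// Workspace query (run in Logs): extrapolate full daily volume from sampled data.
// The multipliers mirror the sampling rates above; adjust them to your own rule.
CommonSecurityLog
| where TimeGenerated > ago(1d)
| summarize SampledGB = sum(_BilledSize) / 1e9 by DeviceVendor
| extend Multiplier = case(DeviceVendor == "VendorB", 10,
                           DeviceVendor == "VendorC", 100,
                           1)
| extend EstimatedFullGB = SampledGB * Multiplier
```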

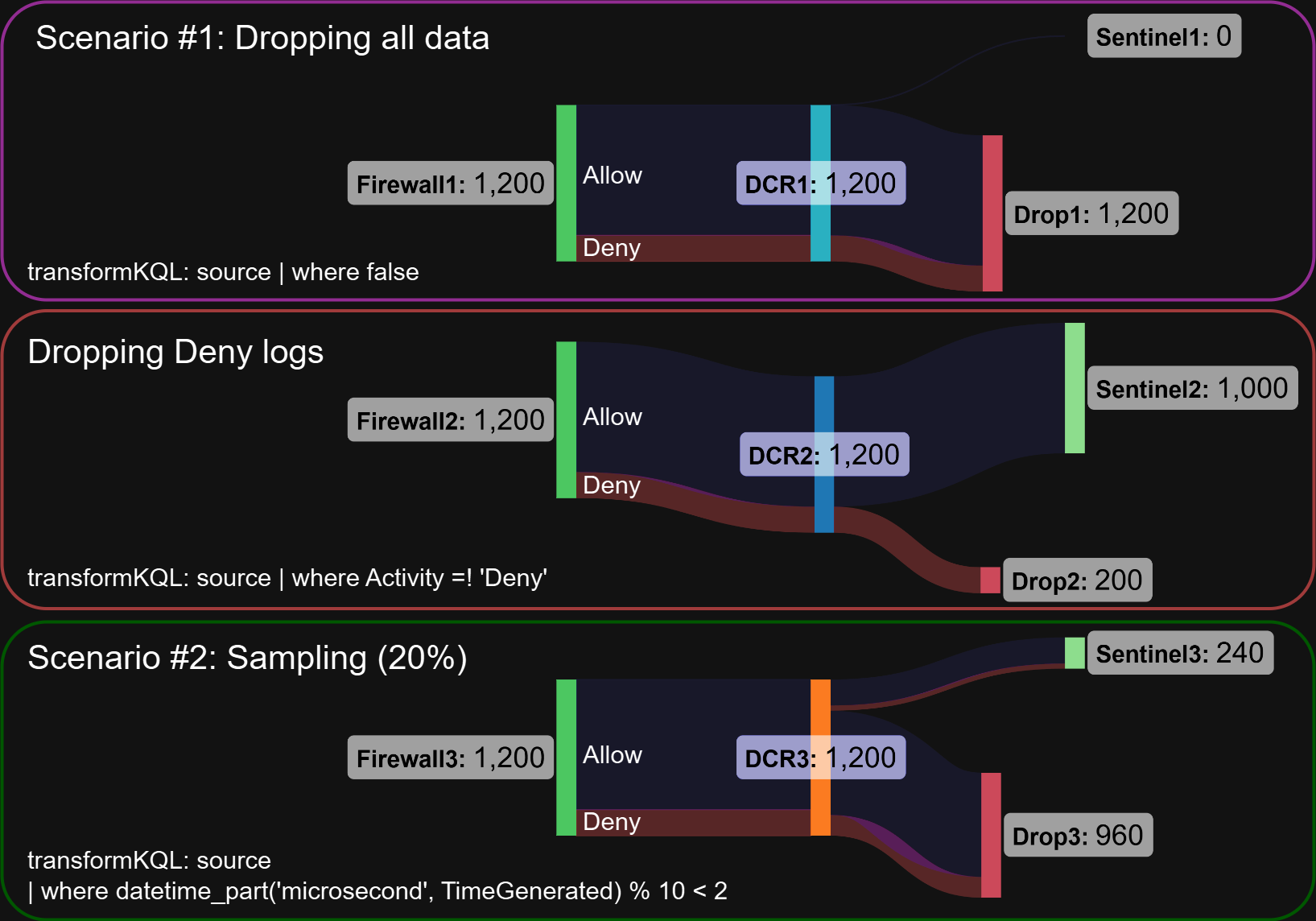

This diagram illustrates the differences between a complete stop, activity-based filtering, and sampling, using 1000 Allow and 200 Deny logs from a firewall as an example. Keep in mind that sampling does not result in precisely 20% of the logs.

Due to a recent change, Microsoft no longer allows the creation of ingestion-time transformations for empty tables via the GUI. Thus, you now need to create the DCR and/or the transformKQL code outside the GUI, such as through ARM templates.

SIEM migration:

- This approach can be applied similarly to the previous recommendation (stopping traffic), allowing some data to continue flowing in and continuously confirming the data connector connection.

- It also serves as a cheap way to estimate the cost of full data for generally expensive tables.

3. Marking the logs before drop

One of the most significant improvements with DCRs (Data Collection Rules) compared to the pre-DCR era is the ability to filter out certain logs and save costs. Using the transformKQL option to drop specific data can be quite powerful.

However, it’s crucial to verify which logs you’re removing to ensure only unwanted logs are discarded. It’s easy to write a transformKQL query that’s too broad, inadvertently dropping too many logs.

If you’ve ever used DCR filtering, you’re likely aware that it doesn’t always work as expected. Functions that work in one environment might not work in another, and omitting explicit type casts can lead to seemingly random log drops. I have run into both issues since this feature was introduced.

A Better Approach: Tagging the Logs First

It can be beneficial to first tag logs with your DCR and confirm which ones would be dropped before fully committing to the drop. For example, you can tag your firewall “Allow” logs like this:

source

| extend drop = iif(DeviceProduct == "Firewall" and Action == "Allow", 1, 0)

After confirming, filter based on the ‘drop’ attribute:

source

| extend drop = iif(DeviceProduct == "Firewall" and Action == "Allow", 1, 0)

| where not (drop == 1)

Or, if you prefer not to keep the ‘drop’ metadata field, adjust the query:

source

| where not (DeviceProduct == "Firewall" and Action == "Allow")

Advantages of This Method:

- Account for the transformation delay: Changes to ingestion-time transformations can take time to apply, so incorrect code could keep dropping important logs for hours before your fix takes effect.

- Client communication without commitment: Tagging logs before dropping them helps in client communication by showing what would be removed without making immediate changes that have consequences.

Given the potentially severe impact of accidentally dropping logs, I strongly recommend tagging events first before proceeding with the filtering. This becomes particularly important in live production environments.
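The review step itself could look something like the following workspace query. It is a sketch with two assumptions: the tagged data lands in CommonSecurityLog, and the tag column shows up as drop_CF, since custom columns added to built-in tables typically carry a _CF suffix. Use whatever column name your table schema actually shows.

```kql
// Workspace query (run in Logs): summarize what the tag would remove.
// Assumptions: table is CommonSecurityLog; tag column is named drop_CF (hypothetical).
CommonSecurityLog
| where TimeGenerated > ago(1d)
| summarize Events = count(), BilledGB = sum(_BilledSize) / 1e9
    by drop_CF, DeviceVendor, DeviceProduct, DeviceAction
| order by Events desc
```

Rows with the tag set to 1 show which vendor, product, and action combinations would disappear, and roughly how much volume the filter would save, before anything is actually dropped.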

SIEM migration:

- This method helps prevent the unintentional loss of important data. In a previous SIEM migration, I discovered that the client was unaware they were dropping critical logs due to an incorrect statement. Using this method, I was able to demonstrate the potential data loss up front, instead of logs being discarded without anyone’s knowledge.

- It allows you to review tagged logs before dropping them. Once logs are dropped, their content can no longer be checked.

4. Identifying the used DCR

Most environments use a simple and flat DCR setup. It is usually recommended not to overcomplicate your DCRs because a hierarchical design, where multiple DCRs are assigned to a single machine for collecting the same types of logs (e.g., different event IDs all classified as Security events), can become unmanageable.

Determining which DCR and XPath query captured the logs, or which ingestion-time transformation processed them, is critical during health investigations. This is vital for understanding why a specific log is collected, why it isn’t filtered out, or why there are duplicates in your environment.

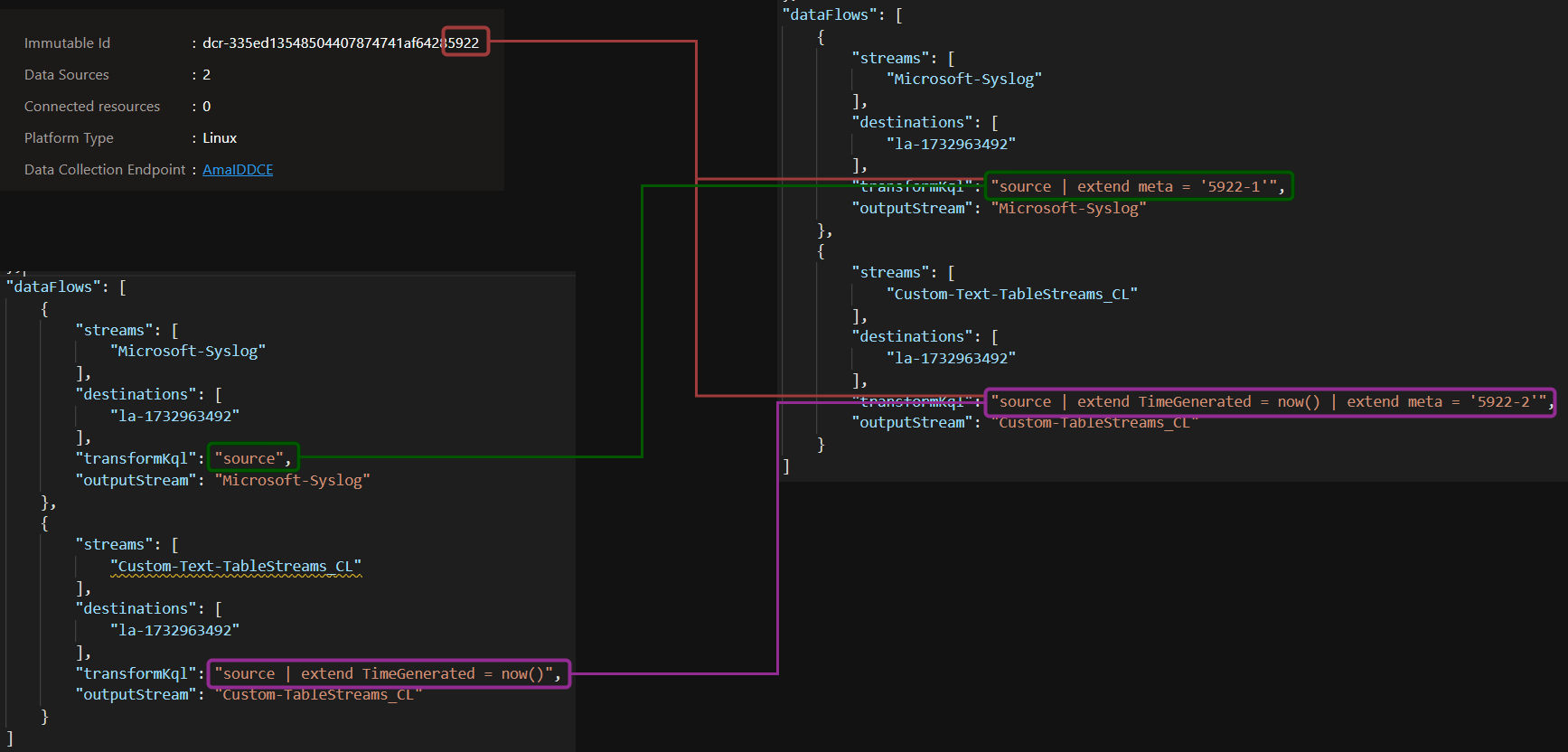

Although there are no automatic methods to add this data, you can create an ingestion-time transformation (or modify existing ones) to include this information. You can use the resource ID of the DCR and manually assign an identifier to the transformKQL (ingestion-time transformation) query to make it identifiable. To minimize data size, you might only use a small portion of the DCR ID, as long as it remains unique in your environment.

In this example, I use the last four digits of the immutable ID of the DCR, together with the number of the transformKQL in the given DCR, to create a ‘meta’ field:
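A minimal sketch of such a transformation is shown here. The value `a1b2` is a made-up stand-in for the last four characters of a DCR's immutable ID, `1` stands for the first transformKQL in that DCR, and the column name meta_CF assumes the usual _CF suffix for custom columns on built-in tables:

```kql
// transformKql sketch: stamp every record with a short DCR/transformation identifier.
// "a1b2-1" is a hypothetical value; replace it per DCR and per transformation.
source
| extend meta_CF = "a1b2-1"
```

Because the identifier is a constant string, it has to be maintained by hand in each transformation. That is the cost of there being no automatic way to add it.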

This is not a tricky technique, just an enrichment setup that I highly recommend for complex environments. It can be immensely beneficial for troubleshooting scenarios down the line.

SIEM migration:

- Understanding which DCR is being utilized during a migration can greatly assist in documenting the entire infrastructure and identifying misconfigured devices.

- It also aids in troubleshooting non-working connections or DCRs. When onboarding thousands of machines, some might not appear in Sentinel or might generate duplicate logs. Knowing which DCR processed which logs, or which DCR is failing to send data, is extremely beneficial.
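With such an identifier in place, spotting machines that are served by more than one DCR becomes a short query. The sketch below assumes SecurityEvent as the table and meta_CF as the identifier column; both names are illustrative:

```kql
// Workspace query (run in Logs): machines whose events arrived via more than one DCR.
// Assumptions: table is SecurityEvent; identifier column is meta_CF (hypothetical).
SecurityEvent
| where TimeGenerated > ago(1d)
| summarize Events = count(), Rules = make_set(meta_CF), RuleCount = dcount(meta_CF) by Computer
| where RuleCount > 1
| order by Events desc
```

Machines listed here are candidates for duplicate collection. Machines missing from the table altogether point to a DCR that is not delivering data.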

Overall

Using these configurations in past SIEM migrations has saved significant time and money for me and my clients. While DCRs have various applications, it’s crucial to consider how they can support your specific use case.

During a SIEM migration, these functionalities are particularly valuable for ensuring a successful transition and achieving client satisfaction.

Additionally, these configurations can be enabled or disabled as needed; there’s no requirement to set them up in Sentinel from the start.