Azure Policies are an excellent tool for standardizing and scaling your environment within Azure. They can be used to configure log collection from Azure resources to Microsoft Sentinel. While you can manually set up log collection if your Azure environment is small, a growing cloud presence will necessitate a more scalable and standardized solution, such as an Azure Policy.

Built-in policies

Azure offers a wide range of default policies for log collection purposes. These policies can assess your resources, and if any are found non-compliant (log collection is not properly configured), they can also be used to configure those resources accordingly.

Azure does not yet offer built-in policies for all resources, and the existing ones have drawbacks that make them unsuitable for many situations. In this article, I’ll guide you through the steps to enhance these built-in policies or create new ones.

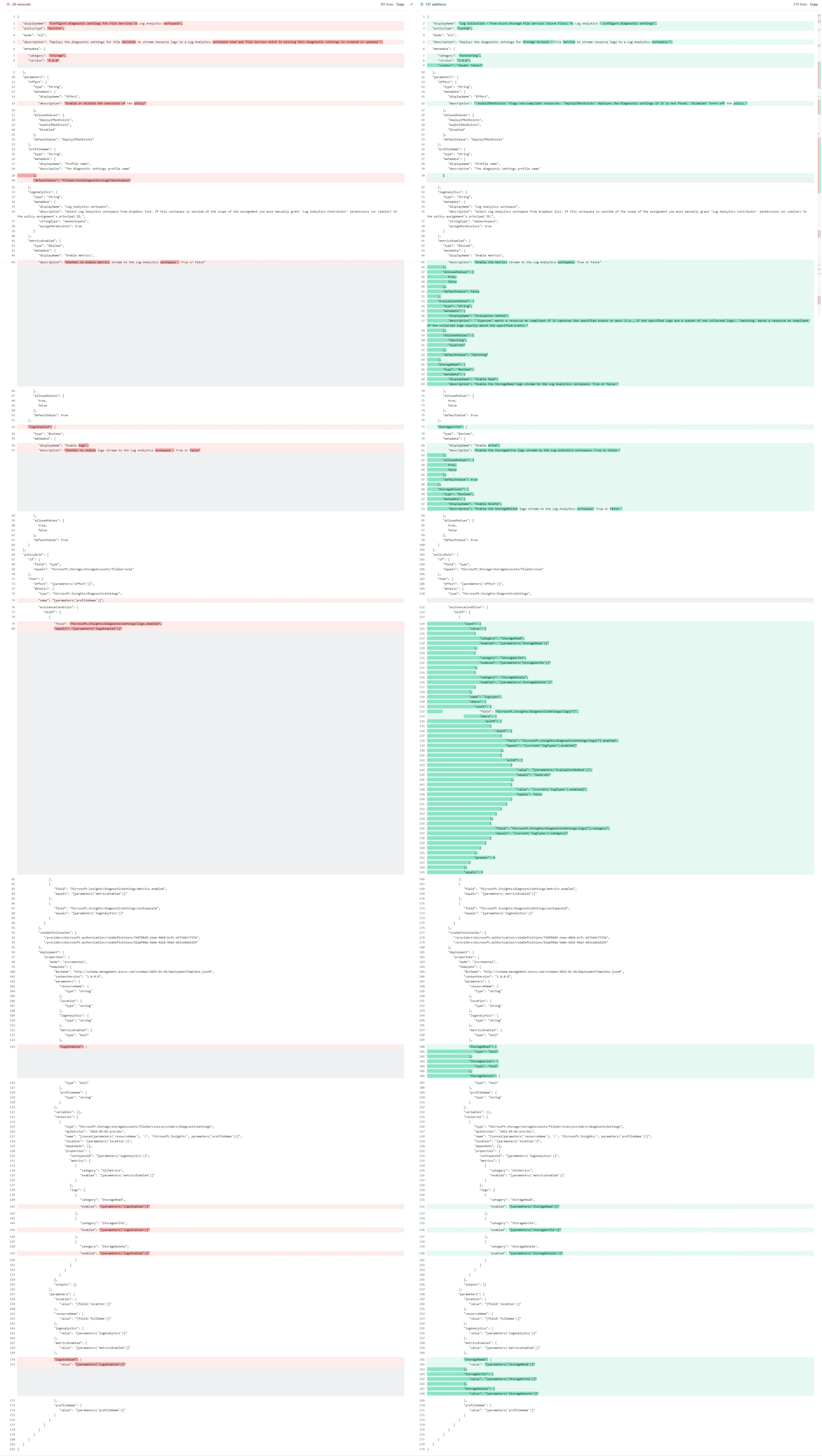

To illustrate how certain aspects of a Policy can be improved, we will use the built-in policy ‘Configure diagnostic settings for File Services to Log Analytics workspace’ (version 4.0.0).

Improvements

Properly assessing the compliance of a resource is crucial. Identifying non-compliant resources is important because these are the ones the policy will configure. Companies also tend to track non-compliant resources and remediate them whenever possible.

From a log collection perspective, a non-compliant resource is one that fails to forward the required logs to the appropriate destination in the expected format. But what exactly counts as non-compliant depends on your requirements.

In this article, I’ll highlight three aspects of built-in policies that can be modified and enhanced. These are:

- The ‘name’ parameter in the policyRule.

- The ‘logs.enabled’ configuration in the existenceCondition.

- Modifying the existenceCondition logic to create a multi-purpose policy.

While these changes will benefit most companies, there may be situations where using the default policy is more appropriate.

1. The ‘name’ parameter

Almost every diagnostic settings policy I checked, if not all of them, contains the ‘name’ property in the ‘details’ of the policyRule ‘then’ section. The ‘name’ field defines the name of the Diagnostic settings configuration that the policy creates for your Azure resources. Some policies let you change this name through a policy parameter. In certain cases, however, it is hardcoded, so you must modify the policy itself to alter it.

```json
"policyRule": {
  "if": {
    "field": "type",
    "equals": "Microsoft.Storage/storageAccounts/fileServices"
  },
  "then": {
    "effect": "[parameters('effect')]",
    "details": {
      "type": "Microsoft.Insights/diagnosticSettings",
      "name": "[parameters('profileName')]",
      "existenceCondition": {
        "allOf": []
      },
      "roleDefinitionIds": [],
      "deployment": {}
    }
  }
}
```

Because of the ‘name’ field, the policy evaluates only the Diagnostic setting whose name matches the name defined in the policy. Settings with any other name are ignored.

But what if the resource is already configured correctly but has a different name? Most companies would consider this a compliant resource, but the built-in policy will evaluate it as non-compliant due to the name discrepancy.

Suppose a resource already forwards logs to a destination through a Diagnostic setting with a different name. A remediation task that creates a new setting with the same destination and overlapping logs will then fail. This is because Diagnostic settings allow a given log type from one resource to be sent to a given destination only once.

Deploying a new Diagnostic settings configuration with the same name will overwrite the existing configuration.
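To make this failure mode concrete, here is a minimal Python model of the two rules just described. First, a log category may reach a given destination through only one Diagnostic setting. Second, a setting with the same name replaces the existing one. The DiagnosticSetting class, the check_deployment function and the names "custom-logs", "policy-setting" and "ws-sentinel" are hypothetical illustrations. They are not part of any Azure SDK, and the model ignores other destination types and limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiagnosticSetting:
    """Hypothetical, simplified view of one Diagnostic setting on a resource."""
    name: str
    workspace_id: str
    categories: frozenset  # log categories the setting sends, e.g. {"StorageRead"}


def check_deployment(existing: list, new: DiagnosticSetting) -> str:
    """Predict what happens when `new` is deployed next to `existing` settings.

    Models only the two rules from the text:
    - a setting with the same name replaces (overwrites) the existing one;
    - a log category may reach the same destination through only one setting.
    """
    # A same-named setting is replaced, so it cannot conflict with `new`.
    others = [s for s in existing if s.name != new.name]
    for setting in others:
        overlap = setting.categories & new.categories
        if setting.workspace_id == new.workspace_id and overlap:
            return "conflict: " + ", ".join(sorted(overlap))
    return "overwrite" if len(others) < len(existing) else "created"


# Existing, manually created setting with a custom name.
existing = [
    DiagnosticSetting("custom-logs", "ws-sentinel",
                      frozenset({"StorageRead", "StorageWrite"})),
]
# Setting that a remediation task would deploy under the policy's name.
from_policy = DiagnosticSetting(
    "policy-setting", "ws-sentinel",
    frozenset({"StorageRead", "StorageWrite", "StorageDelete"}),
)
print(check_deployment(existing, from_policy))  # conflict: StorageRead, StorageWrite
```

Deploying under the same name ("custom-logs") would return "overwrite" in this model. Pointing the new setting at a different workspace would return "created".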

By simply removing the ‘name’ line, you can stop the policy from evaluating only the Diagnostic setting with that exact name. However, the name condition can be beneficial in specific cases, so consider what is best for your environment before making any changes.
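The effect of the ‘name’ property can be sketched as a filter applied before the existenceCondition is checked. With a name, only the setting with that exact name is considered. Without it, any Diagnostic setting on the resource can satisfy the condition. This Python model is a simplification, not the Azure Policy engine. The find_compliant_setting helper, the dictionary shape and the setting and workspace names are hypothetical.

```python
from typing import Callable, Optional


def find_compliant_setting(
    settings: list,
    condition: Callable[[dict], bool],
    required_name: Optional[str] = None,
) -> Optional[dict]:
    """Return the first setting that passes `condition`, or None.

    When `required_name` is given, settings with any other name are skipped,
    mirroring a policy whose details block contains a 'name' property.
    """
    for setting in settings:
        if required_name is not None and setting["name"] != required_name:
            continue  # ignored entirely, however well it is configured
        if condition(setting):
            return setting
    return None


def sends_to_sentinel(setting: dict) -> bool:
    """Stand-in for the existenceCondition: correct destination workspace."""
    return setting["workspaceId"] == "ws-sentinel"


# A correctly configured setting that was created manually with a custom name.
settings = [{"name": "custom-logs", "workspaceId": "ws-sentinel"}]

with_name = find_compliant_setting(settings, sends_to_sentinel, "policy-setting")
without_name = find_compliant_setting(settings, sends_to_sentinel)
print("compliant" if with_name else "non-compliant")     # non-compliant
print("compliant" if without_name else "non-compliant")  # compliant
```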

Issue with the name attribute: In many large environments, resources were flagged as non-compliant because the custom names of existing Diagnostic settings did not match the name expected by newly assigned policies. As a result, built-in policies couldn’t accurately assess compliance.

2. From logs.enabled to a More Granular Condition

Many Azure policies, including those available online, act in an all-or-nothing way: you either gather all logs or none. Resource compliance is often evaluated with the same all-or-nothing approach. In the File Services example, either all StorageRead, StorageWrite, and StorageDelete logs are collected, or none are.

This all-or-nothing behavior results in two serious issues:

- Because the policy only allows all or nothing, you either collect and pay for all the logs, even those you don’t need, or you don’t get any at all. This creates both cost and visibility issues. (Collection issue)

- This setup can only evaluate entirely True or entirely False configurations. For a more granular evaluation, you need to enhance the existenceCondition part of the code. (Evaluation issue)

Here is the code from the sample policy that assesses the log (event) collection of a resource:

```json
{
  "existenceCondition": {
    "allOf": [
      {
        "field": "Microsoft.Insights/diagnosticSettings/logs.enabled",
        "equals": "[parameters('logsEnabled')]"
      },
      {
        "field": "Microsoft.Insights/diagnosticSettings/metrics.enabled",
        "equals": "[parameters('metricsEnabled')]"
      },
      {
        "field": "Microsoft.Insights/diagnosticSettings/workspaceId",
        "equals": "[parameters('logAnalytics')]"
      }
    ]
  }
}
```

The logs.enabled field returns an array that the code compares to a boolean value. For Storage Accounts where Read and Write logs are enabled but Delete logs are not, logs.enabled returns [true, true, false].

Evaluation:

- The logs.enabled condition returns true if the ‘logsEnabled’ parameter is true and all logs are set to be collected ([true, true, true]).

- It also returns true (compliant) if the parameter is false and no logs are collected ([false, false, false]).

Although this evaluation is correct, only some log types may be enabled in some scenarios. In that case, the output of logs.enabled is neither all true nor all false. The built-in policy does not account for such partial configurations.
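Following the behavior described above, the single logsEnabled comparison can be modeled as "every entry in the logs array equals the parameter". The sketch below is a simplified Python model based on that description, not the policy engine itself. The function name and dictionary shape are hypothetical. It shows why a partially enabled resource satisfies neither value of the parameter.

```python
def logs_enabled_equals(logs: list, expected: bool) -> bool:
    """Model of the built-in check: logs.enabled equals [parameters('logsEnabled')].

    As described in the text, the condition holds only when every log
    entry's 'enabled' value equals the parameter.
    """
    return all(entry["enabled"] == expected for entry in logs)


# Read and Write enabled, Delete disabled: logs.enabled is [true, true, false].
partial = [
    {"category": "StorageRead", "enabled": True},
    {"category": "StorageWrite", "enabled": True},
    {"category": "StorageDelete", "enabled": False},
]
print(logs_enabled_equals(partial, True))   # False
print(logs_enabled_equals(partial, False))  # False
```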

To improve the behavior, add separate parameters for each log type instead of a single ‘logsEnabled’ parameter. The new code should check each log type (read, write, delete) individually and compare its value with the policy setting. This way, the evaluation will be more accurate.

```json
{
  "count": {
    "value": [
      {
        "category": "StorageRead",
        "enabled": "[parameters('StorageRead')]"
      },
      {
        "category": "StorageWrite",
        "enabled": "[parameters('StorageWrite')]"
      },
      {
        "category": "StorageDelete",
        "enabled": "[parameters('StorageDelete')]"
      }
    ],
    "name": "logTypes",
    "where": {
      "count": {
        "field": "Microsoft.Insights/diagnosticSettings/logs[*]",
        "where": {
          "allOf": [
            {
              "field": "Microsoft.Insights/diagnosticSettings/logs[*].enabled",
              "equals": "[current('logTypes').enabled]"
            },
            {
              "field": "Microsoft.Insights/diagnosticSettings/logs[*].category",
              "equals": "[current('logTypes').category]"
            }
          ]
        }
      },
      "greater": 0
    }
  },
  "equals": 3
}
```

In the past, I’ve seen people attempt to address this issue by using the ‘in’ condition instead of ‘equals’. However, this approach will fail if Microsoft changes the order of event types or adds or removes any event type.

While one may want to collect all logs from a Storage Account, there are other Azure Resources where collecting all logs is unnecessary and too costly. Therefore, having the ability to selectively collect logs and evaluate resources accordingly is crucial in bigger environments.

3. Multi-purpose policy

The most typical requirement for log collection, from both a cost and coverage perspective, is to collect exactly the defined logs. No fewer logs, as that would create coverage issues, and no more logs, as that could lead to unnecessary costs.

As discussed earlier, some built-in policies fail to meet these requirements.

With the changes explained above, we can effectively solve this issue.

Another common requirement I’ve encountered prioritizes coverage over cost. In other words, companies want to ensure they collect all the logs required by a specific service or team, but additional logs in their SIEM are not a problem (or are even required). This is typical for larger environments. For example:

- You have a Sentinel instance configured and actively in use. You bring in an MSSP to monitor your environment. You want to ensure all logs the MSSP needs are collected, without dropping any logs you already collect and use. In this case, collecting everything the MSSP requires is essential, and having extra logs on top of this is also required.

- You manually configure some critical systems while ensuring you have some minimal logs from all resources. You can set a policy to collect the minimal logs and then manually adjust the Diagnostic settings to gather additional logs for the critical resources. This way, having extra logs from these resources is not an issue.

To address both scenarios with a single policy, we can add an EvaluationMethod parameter and use it in the policy’s ‘existenceCondition’ section. This parameter can be set to either ‘Superset’ or ‘Matching’.

In ‘Matching’ mode, the policy operates as before: a resource is compliant only if it sends exactly the specified logs. ‘True’ for a log type means we want those logs, and ‘False’ means we don’t want them. In ‘Superset’ mode, ‘True’ still means we want the logs, but ‘False’ means we don’t care whether they are collected, so the policy ignores those log types. A resource is therefore compliant if it collects at least the required logs, and additional logs are acceptable.

This table outlines the evaluation process. The resource is configured to send both StorageRead and StorageWrite logs, while the policy assignment requires only StorageRead logs:

| Log Type | Diagnostic settings | Policy Assignment Configuration | Matching mode evaluation | Superset mode evaluation |
|---|---|---|---|---|
| StorageRead | True | True | True | True |
| StorageWrite | True | False | False | True |
| StorageDelete | False | False | True | True |
| Overall evaluation | | | False | True |

- Matching mode (False): The resource is not compliant because StorageWrite is being collected, even though it is not required. Thus, the evaluation results in non-compliance.

- Superset mode (True): The resource is compliant because each log type evaluates to True. A log type is True if the Diagnostic settings configuration matches the policy assignment, or if the policy assignment sets that log type to False. Simply put, nothing required is missing.
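The per-row logic of the table can be sketched the same way. A log type passes if the Diagnostic setting matches the assignment or, in ‘Superset’ mode only, if the assignment sets that log type to False. This is the same rule that the anyOf condition in the next snippet encodes. The Python model below reproduces the Matching and Superset columns for the example resource. The log_type_passes function and the rows dictionary are hypothetical.

```python
def log_type_passes(collected: bool, configured: bool, method: str) -> bool:
    """Per-log-type evaluation as shown in the table."""
    if collected == configured:
        return True
    # In Superset mode, a log type set to False in the assignment is "don't care".
    return method == "Superset" and not configured


# Rows from the table: (Diagnostic settings, Policy Assignment Configuration)
rows = {
    "StorageRead": (True, True),
    "StorageWrite": (True, False),
    "StorageDelete": (False, False),
}

for method in ("Matching", "Superset"):
    results = {name: log_type_passes(c, p, method) for name, (c, p) in rows.items()}
    print(method, results, "overall:", all(results.values()))
```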

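Here is the modification of the evaluation logic (showing only the relevant parts). The anyOf replaces the logs[*].enabled condition inside the inner ‘where’ clause of the count expression: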

```json
{
  "anyOf": [
    {
      "field": "Microsoft.Insights/diagnosticSettings/logs[*].enabled",
      "equals": "[current('logTypes').enabled]"
    },
    {
      "allOf": [
        {
          "value": "[parameters('EvaluationMethod')]",
          "equals": "Superset"
        },
        {
          "value": "[current('logTypes').enabled]",
          "equals": false
        }
      ]
    }
  ]
}
```

Summary

Keep in mind that the code needs further changes to work, such as adding the new parameters and using them. The snippets above demonstrate only the logic changes. The comparison shows that I not only updated the evaluation logic (removing the name parameter and adding the new evaluation conditions) but also made several smaller modifications to leverage the new parameters and features.

Find the policy on my GitLab, or check the comparison of the original and the modified code below:

Post Summary

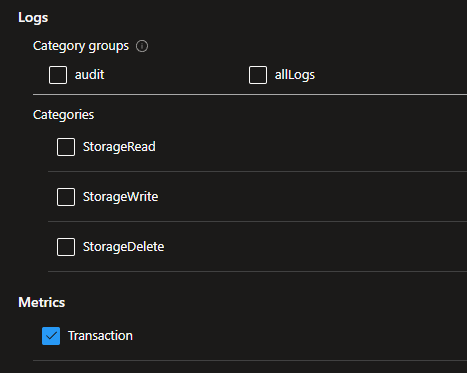

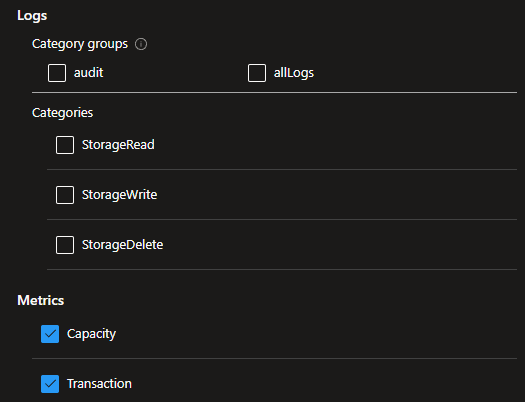

The configurations available in the GUI and via code can differ. For instance, for Storage Account File Service logging, the built-in policy can enable AllMetrics, which covers both Transaction and Capacity metrics. However, only Transaction metrics are visible in the GUI when you create a new Diagnostic settings configuration.

This is what appears when you create a configuration from scratch in the GUI:

Here’s the configuration visible when you enable AllMetrics through the Azure Policy:

This means that ongoing review of created diagnostic settings and Azure policies is necessary.