Jupyter Notebooks are remarkably versatile tools, even within Microsoft Sentinel’s data lake, where current capabilities are limited. While Microsoft frequently highlights historical threat intelligence correlation and long-term threat hunting as notebook use cases, notebooks unlock far more practical possibilities.

In a previous post, I explored the theoretical foundations of using notebooks for advanced data pipelines in Microsoft Sentinel. This follow-up takes a different approach: it focuses on practical solutions to problems I’ve encountered in production environments. Many of these scenarios were simply impossible to address using only traditional Sentinel SIEM capabilities.

The use cases covered here tackle cost optimization, complex log routing, and advanced analytics workflows. Each represents a practical pattern you can adapt to your own environment rather than a theoretical architecture.

Use Case 1: Commitment Tier Optimization Through Backfilling

The Challenge

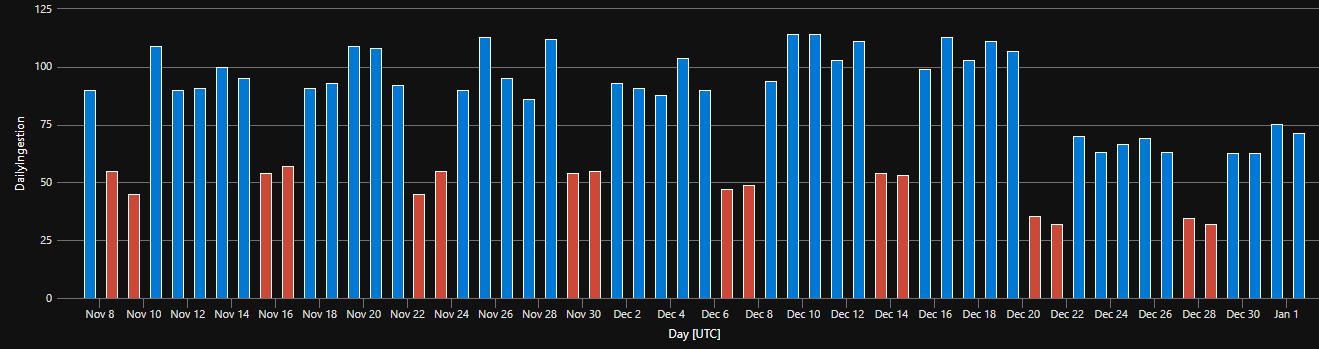

If your organization commits to a Commitment Tier, you’re charged the full fixed amount regardless of actual usage, and going over the committed volume still incurs overage ingestion charges. During weekends and other non-business days, ingestion typically drops significantly. This unused headroom is money wasted on a contractual commitment.

Typical ingestion pattern of a company during holidays

The opportunity: Use the data lake to store raw data that can be processed outside peak hours, filling that commitment tier gap without increasing pay-as-you-go (PAYG) costs. By strategically delaying lower-priority logs or summarizations until low-traffic windows, you shift expensive analytics tier costs to already-paid capacity, effectively getting that storage for free.

Use cases:

- Logs from test or dev environments are good to have in the SIEM, but real-time detections rarely need them, so their ingestion into the analytics tier can be delayed.

- Inventory data: Lists of machines, accounts, and vulnerabilities are frequently re-ingested in full on a schedule, while only the changes are ingested continuously. These logs, just like the built-in IdentityInfo table, can be ingested during these gaps.
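The size of the gap described above can be estimated with simple arithmetic. The following is a minimal sketch, assuming a hypothetical 100 GB/day commitment and made-up daily volumes; none of these figures come from a real workspace, and your own numbers would come from your usage data.

```python
# Hypothetical figures: a 100 GB/day commitment tier and one week of
# analytics tier ingestion in GB (Monday to Sunday).
COMMITMENT_GB_PER_DAY = 100.0
daily_ingestion_gb = [104.0, 98.0, 96.0, 101.0, 93.0, 41.0, 38.0]


def unused_headroom(ingested_gb: float, commitment_gb: float) -> float:
    """Paid-for capacity that was not used on a given day."""
    return max(commitment_gb - ingested_gb, 0.0)


def overage(ingested_gb: float, commitment_gb: float) -> float:
    """Volume above the commitment, billed on top of the fixed amount."""
    return max(ingested_gb - commitment_gb, 0.0)


headroom = [unused_headroom(gb, COMMITMENT_GB_PER_DAY) for gb in daily_ingestion_gb]
over = [overage(gb, COMMITMENT_GB_PER_DAY) for gb in daily_ingestion_gb]

print("headroom per day (GB):", headroom)
print("total unused (GB):", sum(headroom))
print("total overage (GB):", sum(over))
```

In this made-up week, most of the unused capacity sits on Saturday and Sunday, which is the window that delayed logs can fill.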

Workflow

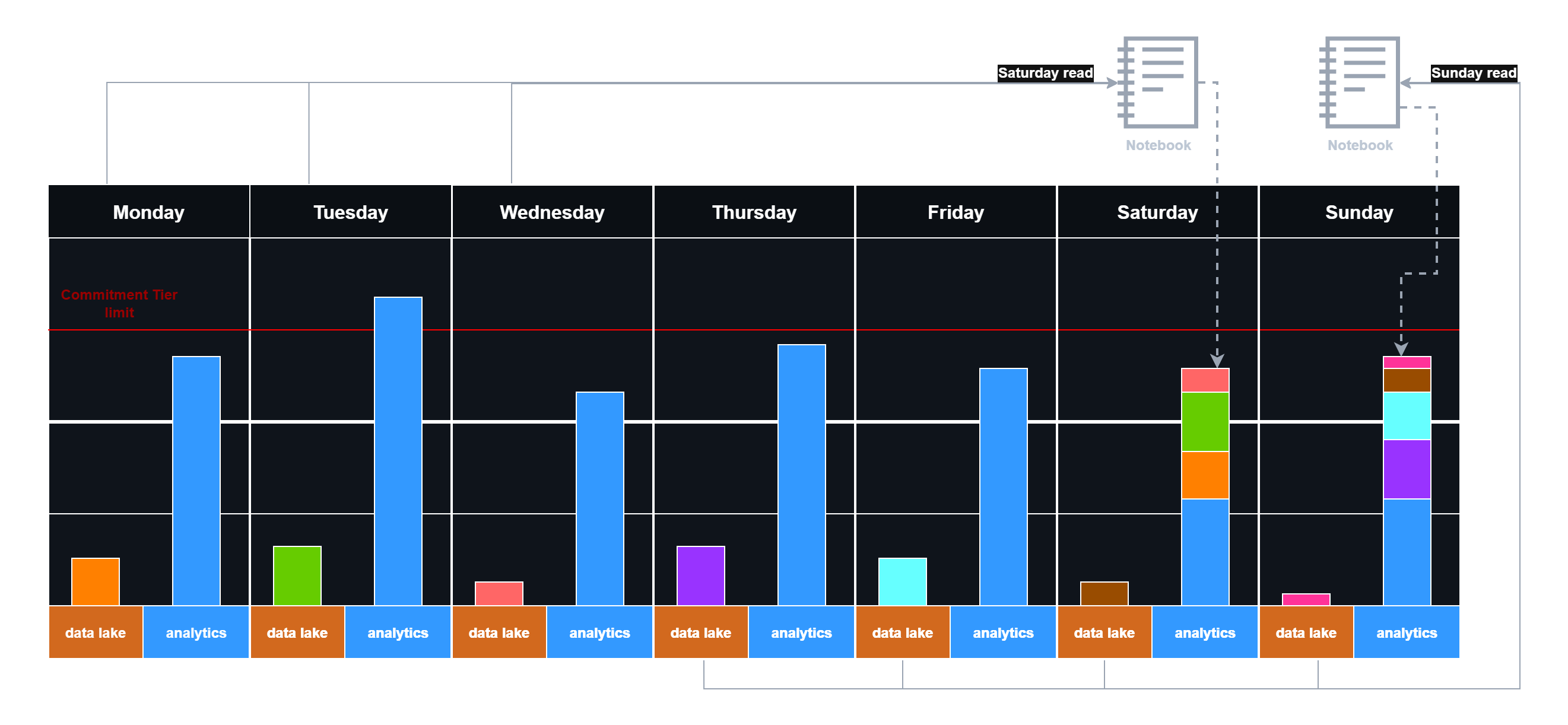

Off-days processing workflow:

- During business days: Ingest high-priority security events into the analytics tier as normal (threat detection, identity activity, authentication logs - blue in the diagram), but keep less important logs in the data lake (various colors in the image).

- Weekend/night processing: Schedule notebooks to run when the commitment tier is underutilized, transforming low-priority data and aggregations and promoting them to the analytics tier if needed (stacked columns in the image).

Delayed logging with weekend data reingestion

A potential inefficiency already appears in practice: Wednesday might have available commitment tier capacity, but logs don’t get pushed until the weekend because notebooks only check on weekends. You can accept this gap for simplicity, or create notebooks that process weekdays too - adding complexity but improving commitment tier utilization. The key takeaway is that commitment tier efficiency can be improved with the right strategy.
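The trade-off between weekend-only and daily processing can be illustrated with a small planner. This is a sketch under stated assumptions: the commitment size, the daily volumes, the batch sizes, and the `plan_backfill` helper are all hypothetical, and batches are promoted strictly oldest-first, so a large batch at the head of the queue blocks smaller ones behind it.

```python
from collections import deque

COMMITMENT_GB = 100.0  # hypothetical daily commitment tier

# Hypothetical week: (day, GB of real-time ingestion into the analytics tier)
week = [("Mon", 104.0), ("Tue", 98.0), ("Wed", 80.0), ("Thu", 101.0),
        ("Fri", 93.0), ("Sat", 41.0), ("Sun", 38.0)]


def plan_backfill(week, backlog_gb, allowed_days):
    """Promote deferred batches (oldest first) on allowed days without
    exceeding the commitment. Returns (GB promoted per day, leftover batches)."""
    queue = deque(backlog_gb)
    promoted = {}
    for day, realtime_gb in week:
        room = max(COMMITMENT_GB - realtime_gb, 0.0)
        used = 0.0
        # Only promote on allowed days, and only while the next batch fits.
        while day in allowed_days and queue and queue[0] <= room - used:
            used += queue.popleft()
        promoted[day] = used
    return promoted, list(queue)


backlog = [15.0, 5.0, 30.0, 25.0, 40.0, 20.0]  # deferred batch sizes in GB

weekend_only, left_weekend = plan_backfill(week, backlog, {"Sat", "Sun"})
every_day, left_daily = plan_backfill(week, backlog, {day for day, _ in week})

print("weekend only:", weekend_only, "leftover:", left_weekend)
print("every day:   ", every_day, "leftover:", left_daily)
```

With these made-up numbers, the weekend-only plan leaves two batches unprocessed, while the daily plan uses Wednesday's spare capacity and clears the backlog.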

Use Case 2: Advanced Log Splitting Across Multiple Sentinels

The Challenge

Many organizations operate multiple Sentinel workspaces for various reasons. Some logs, however, originate from shared infrastructure, which makes DCR-based splitting logic hard to implement. DCRs handle each log record individually, while in some cases the logs themselves do not contain the information needed to decide which Sentinel they should be stored in.

The opportunity: In this case, you can create a staging Sentinel and use data lake tables to store the logs, either as a source of truth (kept there long-term) or as temporary storage. A notebook then processes the logs and forwards them to their final destination. This works because notebooks can read and write logs across different connected Sentinel instances.

For example:

- User authentication logs that must be split based on department, team membership, or organizational hierarchy before being pushed to workspaces, which means the data needed for the decision has to be looked up in other tables.

- Cloud platform activity that needs to be segregated by an assigned tag that is not available in the resource logs.

Workflow

Notebooks can perform intelligent log splitting based on data enriched from various workspaces and tables, then write the results back to separate analytics tables or push them to different Sentinel workspaces.

Log splitting workflow:

- Ingest all raw logs to the data lake (no filtering at ingestion time)

- Run a notebook that:

  - Loads raw logs and enrichment data (user identity tables, organizational lookups, resource mappings)

  - Joins logs with context (e.g., user’s department from IdentityInfo table)

  - Filters and splits data as needed

  - Writes results back to analytics tables
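The join-then-split steps above can be sketched in plain Python. This is only an illustration of the logic: the lookup contents, workspace names, and the `split_logs` helper are hypothetical, and a real notebook would read and write through the data access library available in the notebook environment rather than in-memory lists.

```python
from collections import defaultdict

# Hypothetical enrichment lookup, standing in for a table such as IdentityInfo.
identity = {
    "alice@contoso.com": "Finance",
    "bob@contoso.com": "Engineering",
}

# Hypothetical routing: department -> destination workspace name.
workspace_for_department = {
    "Finance": "sentinel-finance",
    "Engineering": "sentinel-engineering",
}
DEFAULT_WORKSPACE = "sentinel-staging"  # unresolvable logs stay in staging

raw_logs = [
    {"user": "alice@contoso.com", "action": "SignIn"},
    {"user": "bob@contoso.com", "action": "SignIn"},
    {"user": "carol@contoso.com", "action": "SignIn"},  # no identity record
]


def split_logs(logs, identity, routing, default):
    """Join each log with the user's department, then group by destination."""
    batches = defaultdict(list)
    for log in logs:
        department = identity.get(log["user"])  # None if the user is unknown
        enriched = {**log, "department": department}
        batches[routing.get(department, default)].append(enriched)
    return dict(batches)


batches = split_logs(raw_logs, identity, workspace_for_department, DEFAULT_WORKSPACE)
for workspace, rows in batches.items():
    print(workspace, len(rows))
```

Keeping an explicit default destination means records that cannot be enriched are not silently dropped. They stay in staging, where they can be reviewed or reprocessed once the lookup data catches up.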

Use Case 3: Behavioral Aggregation for Cloud Logs

The Challenge

Some log sources generate a tremendous volume of logs, many of them redundant or overly detailed. When a user performs a single action through the GUI, it can result in multiple log records.

This generates 4-5+ separate log records for a single action. Multiply this across thousands of users and numerous cloud operations, and the analytics tier becomes bloated with repetitive, low-fidelity data. This inflates ingestion costs and makes threat detection noisier.

Behavioral aggregation: Instead of keeping all the raw logs, you can identify recurring patterns in your environment. When you find a pattern, you can replace the underlying logs with a single activity event. When an event matches no pattern, you can simply ingest it as a raw log.
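As a rough illustration of this idea, the sketch below collapses a known run of operations by the same user into one activity event and passes everything else through unchanged. The operation names, the `PortalBlobDownload` activity, and the record fields are all hypothetical and do not correspond to any real log schema.

```python
# Hypothetical pattern: one GUI action that emits this exact run of operations.
PATTERNS = {
    ("ListKeys", "GetProperties", "ReadBlob", "WriteAudit"): "PortalBlobDownload",
}


def aggregate(events):
    """Replace consecutive events that match a known pattern (same user)
    with one activity event; pass unmatched events through as raw logs."""
    out = []
    i = 0
    while i < len(events):
        matched = False
        for ops, activity in PATTERNS.items():
            window = events[i:i + len(ops)]
            same_ops = tuple(e["operation"] for e in window) == ops
            same_user = len({e["user"] for e in window}) == 1
            if same_ops and same_user:
                out.append({
                    "user": window[0]["user"],
                    "activity": activity,
                    "start": window[0]["time"],
                    "end": window[-1]["time"],
                    "raw_count": len(window),
                })
                i += len(ops)
                matched = True
                break
        if not matched:
            out.append(events[i])  # no pattern: keep the raw log
            i += 1
    return out


events = [
    {"time": 1, "user": "alice", "operation": "ListKeys"},
    {"time": 2, "user": "alice", "operation": "GetProperties"},
    {"time": 3, "user": "alice", "operation": "ReadBlob"},
    {"time": 4, "user": "alice", "operation": "WriteAudit"},
    {"time": 5, "user": "bob", "operation": "DeleteBlob"},
]

result = aggregate(events)
print(result)
```

Here five raw records become two: one aggregated activity event and one untouched raw log. Real sources interleave events from many users, so a production version would likely group by user or session before matching.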

For example, consider how firewall logs relate to network packets. At the network layer, there are many packets for the TCP handshake, the TLS setup, and the actual communication. At the firewall level, all these packets are translated into a single log or a few logs, such as Connection started, Connection blocked, or Teardown.

Keeping firewall connection started and teardown events with aggregated information (e.g., traffic size) takes far less space than keeping every packet as an individual event in a SIEM.
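The packet-to-connection analogy can be made concrete with a small sketch. The packet tuples and the `summarize` helper below are hypothetical; the point is only that many per-packet records reduce to one summary per connection with counters such as packet count and total bytes.

```python
# Hypothetical packet records: (timestamp, src, dst, dst_port, size_bytes)
packets = [
    (0.00, "10.0.0.5", "203.0.113.10", 443, 60),
    (0.01, "10.0.0.5", "203.0.113.10", 443, 52),
    (0.02, "10.0.0.5", "203.0.113.10", 443, 517),
    (0.90, "10.0.0.5", "203.0.113.10", 443, 1400),
    (0.40, "10.0.0.7", "198.51.100.4", 53, 74),
]


def summarize(packets):
    """Collapse packets into one summary record per (src, dst, port)."""
    flows = {}
    for ts, src, dst, port, size in packets:
        flow = flows.setdefault((src, dst, port), {
            "src": src, "dst": dst, "dst_port": port,
            "first_seen": ts, "last_seen": ts,
            "packets": 0, "bytes": 0,
        })
        flow["first_seen"] = min(flow["first_seen"], ts)
        flow["last_seen"] = max(flow["last_seen"], ts)
        flow["packets"] += 1
        flow["bytes"] += size
    return list(flows.values())


connections = summarize(packets)
for conn in connections:
    print(conn)
```

Five packet records become two connection summaries while the totals that matter for detection and reporting are preserved.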