Lately, each month, I see a new feature from Microsoft that I think is going to be a game-changer for Sentinel. And again, there is a new one that was introduced a month ago, and I think it could be a feature that moves Microsoft’s SIEM to the next level. This feature is called the Ingestion-Time Data Transformation. With this feature, you can easily enrich your logs with additional data or filter the ingested events and fields, which can help you save on the ingestion and retention costs.

Usually, I create posts to solve frequently mentioned problems or to work on challenging tasks that have not been solved yet. This post is not going to be like that. I haven’t written any articles lately, so I decided to work on one that is simple to put together but can help a lot of people and companies save some money.

Microsoft released the new Ingestion-Time Data Transformation functionality only recently. The feature still has a lot of limitations regarding the KQL functions you can use in it. Also, even though it is already in public preview, you can’t access it by default: to get the feature on the GUI, you have to ask Microsoft to enable it for you by filling out this form.

Ingestion-Time Data Transformation

You can read about it on the above-linked page, but here are a few words about it. With Ingestion-Time Data Transformation you can modify the ingested data at ingestion-time. Simple, right? This means the modified data is going to be stored in the table instead of the originally forwarded information. This can be used to:

- Add information to an event, like the department information of a user.

- Remove an unnecessary or redundant field from an event.

- Drop an unnecessary event completely.

And you can do all of this with easy-to-use KQL queries. If you have used KQL queries in the past for investigation or rule creation, then you will be able to create a Data Transformation query just as easily.
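To make the three use cases above more concrete, here is a minimal sketch of what a transformation query for the SecurityEvent table could look like. The EventID, the `svc_` naming convention and the `Department_CF` column are hypothetical examples. Adding a new column to a built-in table assumes you have already created it as a custom column (custom columns on built-in tables need the `_CF` suffix), and not every KQL operator is supported in transformations, so check Microsoft’s list of supported features before deploying anything like this. I added comments for readability; remove them if your editor flattens the query into a single line.

```kql
source
// Drop an unnecessary event completely (4662 is only an example EventID)
| where EventID != 4662
// Remove a redundant field from every remaining event
| project-away Activity
// Add information to the event; Department_CF is a hypothetical custom column
| extend Department_CF = iff(Account contains "svc_", "Service accounts", "Unknown")
```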

Usage

You can check Microsoft’s page to see how to create a Transformation rule, but I also recorded a quick gif myself to show you one of the rules I’m going to explain later on in this blog post.

The ‘Tuning (Preview)’ tab is only visible if Microsoft has enabled it for you already.

Tuning

I’m going to show some fine-tuning options almost everybody can use to decrease the amount of ingested data. But before you drop any fields, please check whether your SOC uses them during investigations or whether any of your Analytics rules rely on them.

While the fields I am dropping in these examples are usually redundant or not needed, in some situations they can be required.

1. Filter Windows Event logs

Windows Event logs are usually among the top 10 most talkative logs for a company. But the cost of these logs really depends on what you actually collect and from which machines you gather them. If you forward Windows Events from all the servers and workstations (with the MMA agent) and you configure the collection to pick up all the existing Windows Events, then you are going to pay a ton of money. In this case, you should really consider using an Ingestion-Time Data Transformation Rule.
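Before writing a rule, it is worth checking which events actually drive the volume. Here is a sketch of such a query, meant to be run in Log Analytics rather than as a transformation. Keep in mind that `estimate_data_size()` returns an estimate, not the billed size, and the 30-day window and top 20 cutoff are arbitrary choices:

```kql
// Rank event sources and EventIDs in the Event table by estimated size
Event
| where TimeGenerated > ago(30d)
| extend event_size = estimate_data_size(*)
| summarize Events = count(), SizeMB = sum(event_size) / 1024.0 / 1024.0 by Source, EventLog, EventID
| top 20 by SizeMB desc
```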

Some fields to drop:

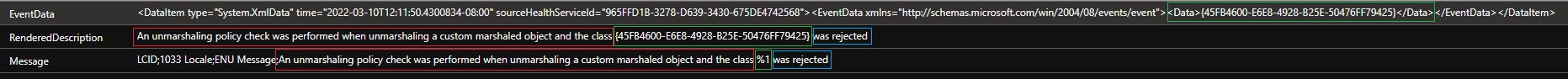

- RenderedDescription: There is one really big field in this log: the RenderedDescription field. This field is redundant; even without it, the log makes perfect sense. Having the full description can help Analysts quickly understand what is happening during an investigation, but it is not needed for rules, and you can fully understand the meaning of an event without it. The RenderedDescription can be put together dynamically from the ‘Message’ and the ‘EventData’ fields. I color-coded the values in the image above to show how the RenderedDescription field is calculated.

- Message: The Message field contains the structure of the RenderedDescription. If you need the included information, you can keep this field. However, if you can rely solely on the EventID of an event, or you can put together the RenderedDescription information (if you need it) without the Message field, then you won’t need this data. It depends on your needs.
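To see how these fields relate to each other in your own logs, you can sample a few events and compare them side by side. A simple sketch, assuming some of your events have the RenderedDescription field populated; the one-day window and sample size are arbitrary:

```kql
// Show Message, EventData and RenderedDescription next to each other for a few events
Event
| where TimeGenerated > ago(1d)
| where isnotempty(RenderedDescription)
| project TimeGenerated, EventID, Message, EventData, RenderedDescription
| take 10
```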

Let’s see the size of these fields compared to the whole event:

```kql
Event
| where TimeGenerated > ago(60d)
// Estimated size of the whole event and of the two candidate fields
| extend whole_size = estimate_data_size(*),
         message_size = estimate_data_size(Message),
         rendereddescription_size = estimate_data_size(RenderedDescription)
// Share of each field in the total size of all events, in percent
| summarize ['Message size in % compared to the whole event'] = 100.0 * sum(message_size) / sum(whole_size),
            ['RenderedDescription size in % compared to the whole event'] = 100.0 * sum(rendereddescription_size) / sum(whole_size)
```

With this query, you can check the size of the Message and the RenderedDescription fields compared to the size of the whole event, aggregated over all events.

In my workspace, dropping the Message field decreased the event size by 10%, and dropping the RenderedDescription field decreased it by 26% on average. Even if you need the Message field, getting rid of the RenderedDescription is still a good idea.

So, if your logs are like mine, you can save about 36% of the ingestion and retention cost of the Event table with this rule.

And here is the query I used to achieve this:

```kql
source
| project-away RenderedDescription, Message
```

The ‘source’ keyword refers to the table under transformation (in this case, the Event table), and the next line drops the two fields.
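After the rule is deployed (it may take some time to take effect), a quick sanity check could be to count how many new events still carry these fields. A sketch with an arbitrary one-hour window; once the transformation is active, both counters should drop to zero:

```kql
// Count recent Event records that still contain the dropped fields
Event
| where TimeGenerated > ago(1h)
| summarize TotalEvents = count(),
            WithMessage = countif(isnotempty(Message)),
            WithRenderedDescription = countif(isnotempty(RenderedDescription))
```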

2. Filter Windows Security Event logs

Based on my experience, this table is usually one of the three most talkative log sources in an environment. Usually, the CommonSecurityLog and Syslog tables are ahead of it, but it is important to point out that those tables can contain logs from multiple network devices and other Syslog-based log sources.

Again, just because I do not use some of the fields in the logs does not mean they are not useful to you. So please, before dropping any fields, check your SOC processes and Analytics rules.

Here are some of the fields I think can be dropped most of the time:

- Activity: The Activity field is an enriched version of the EventID field. For example, when the EventID field contains “4688”, the Activity field contains “4688 - A new process has been created.” The field is redundant and can be dropped. You can easily look this information up from a watchlist.

- Channel: Usually ‘Security’, so it is not needed. (I saw some AppLocker values here as well, but the field is still not needed.)

- EventOriginId: This field is added by the monitoring agent. Most of the time, it is not useful from a security perspective.

- EventSourceName: The name of the event provider. It can be deduced from the EventID and is not needed for security investigations.

- ManagementGroupName: The name of the management group for System Center Operations Manager agents. For other agents, this value is AOI-<workspace ID>. It is added by the agent and can be dropped. The same field can be dropped from the Event table as well.

- MG: This value seems to be the ID of the management group (not confirmed). It is added by the agent. The same field can be removed from the Event table too.
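For the Activity field, the query-time alternative could look like the sketch below. It assumes a hypothetical watchlist with the alias `SecurityEventIds` that has an `EventID` and a `Description` column. `_GetWatchlist()` is the built-in Sentinel function for reading watchlists, but the alias and the column names are only examples:

```kql
// Hypothetical watchlist "SecurityEventIds" with EventID and Description columns
let EventDescriptions = _GetWatchlist('SecurityEventIds')
    | project EventID = toint(EventID), Description = tostring(Description);
SecurityEvent
| where TimeGenerated > ago(1d)
// Re-create the Activity-style description at query time
| lookup kind=leftouter EventDescriptions on EventID
| project TimeGenerated, Computer, EventID, Description
```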

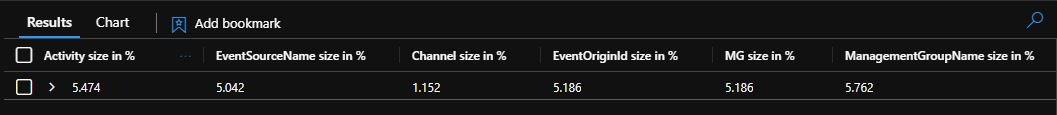

These fields are usually not required. And while one by one they are small, together they take up a large amount of space in a log. In the following image, you can see the average size of these fields compared to the entire SecurityEvent logs in my tenant:

All these fields together account for approximately 28% of the SecurityEvent log size in my tenant. Considering that the SecurityEvent table is one of the noisiest ones, you should take a look at your environment and consider filtering out some of these fields. This can save a lot of money.
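You can measure the same thing in your own workspace by adapting the Event query from the previous section. A sketch, assuming the field list above and a 30-day window; `estimate_data_size()` accepts multiple columns, so the candidate fields can be measured together:

```kql
SecurityEvent
| where TimeGenerated > ago(30d)
// Estimated size of the whole event and of all candidate fields together
| extend whole_size = estimate_data_size(*),
         dropped_size = estimate_data_size(Activity, EventSourceName, Channel, EventOriginId, MG, ManagementGroupName)
| summarize ['Dropped fields size in % compared to the whole event'] = 100.0 * sum(dropped_size) / sum(whole_size)
```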

You can use the following query as a Data Transformation query:

source

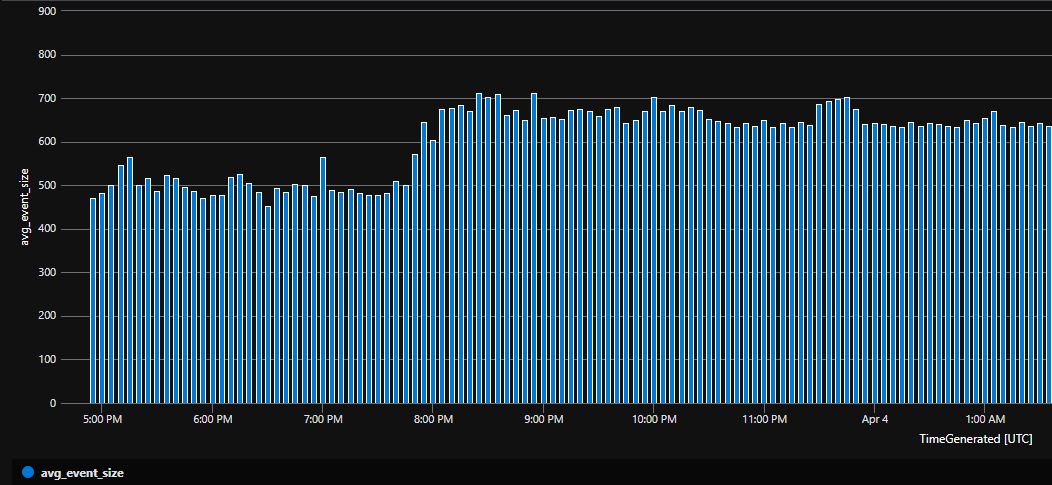

| project-away Activity, EventSourceName, Channel, EventOriginId, MG, ManagementGroupNameI had this filter enabled at the time of creating the blog post. You can see the increase in the average event size (from ~500 bytes to ~650 bytes) after removing the filter:

Results

As you can see, dropping these fields during ingestion can save a lot of space and cost. But obviously, you should also consider the negative implications.

Since this solution is only enabled if you request it for your tenant/subscription, I assume it is not yet production-ready, even though it is already in public preview.

One implication I was specifically interested in was the change in ingestion delay after deploying a Data Transformation rule. Well, apparently the simple rules I deployed did not affect the ingestion delay measurably. I monitored this over multiple days, and while I sometimes saw an increase in the ingestion delay (from 22 seconds to 36 seconds), in other situations the delay actually decreased by a few seconds.

My rules were pretty lightweight, so the ingestion delay may be affected more significantly by a heavier Data Transformation rule.
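If you want to track the ingestion delay yourself, a sketch based on the `ingestion_time()` function could look like this. It is not necessarily how the numbers above were measured, and the seven-day window, hourly bins and 95th percentile are arbitrary choices. Note that TimeGenerated reflects when the event was generated on the machine, so the measured delay also includes the agent’s own upload delay, not only the transformation:

```kql
SecurityEvent
| where TimeGenerated > ago(7d)
// Delay between event generation and ingestion, in seconds
| extend DelaySeconds = (ingestion_time() - TimeGenerated) / 1s
| summarize AvgDelay = avg(DelaySeconds), P95Delay = percentile(DelaySeconds, 95) by bin(TimeGenerated, 1h)
| render timechart
```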

Some other filtering / enrichment worth looking at

- IP Geolocation enrichment: While this use case seems to be the most straightforward one, it is not that easy to implement due to the limitations of the Ingestion-Time Data Transformation rules. For example, you can’t use the externaldata operator in them.

- Pre-filtering and dropping SQL Server audit logs: This log is extremely noisy and hard to process effectively. Most of the time, I can’t even recommend forwarding these logs to Sentinel with the MMA agent. But now, you have the option to filter and modify this log type.

- Firewall and network device log filtering: This is also worth doing in Sentinel. In the past, you had to modify some of the events on the network device itself (for example, by utilizing a custom CEF template), or you had to do the filtering with Syslog or Logstash config files. Having a unified solution can make this process much more convenient for an MSSP that must deal with various tools and network configurations.
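As an illustration of the firewall idea, a transformation on the CommonSecurityLog table could drop a known-noisy combination of events. This is a sketch only: the vendor name, action value and port are hypothetical, so base your own filter on what your SOC and Analytics rules actually need. Remove the comment if your editor flattens the query into a single line:

```kql
source
// Hypothetical example: drop allowed DNS traffic from one noisy vendor
| where not(DeviceVendor == "ExampleVendor" and DeviceAction == "allow" and DestinationPort == 53)
```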