Managing logs in a SIEM environment can be challenging, especially when you aim to design a solution that is extensible, highly available, future-proof, and reasonably priced. In this third installment of the ‘Powerful Capabilities of Data Collection Rules’ series, I show how the ‘Event Hubs-to-DCR’ preview pipeline turns a standard Event Hubs namespace into a scalable, cost-effective log funnel for Sentinel, enabling filtering, enrichment, and log flow control.

Microsoft’s Deployment Guide

Microsoft already provides an excellent, detailed step-by-step guide for deploying Event Hub–to–Microsoft Sentinel pipelines. If you are looking for specific deployment instructions, I recommend checking out their documentation, which covers every technical step of the deployment.

Rather than repeat those steps, this post is for readers who want to go beyond the basics. I will focus on why you should choose this architecture, what benefits it provides, and how you can use this setup to make your log pipeline more effective, resilient, and cost-optimized.

Beyond Deployment: Design and Practical Considerations

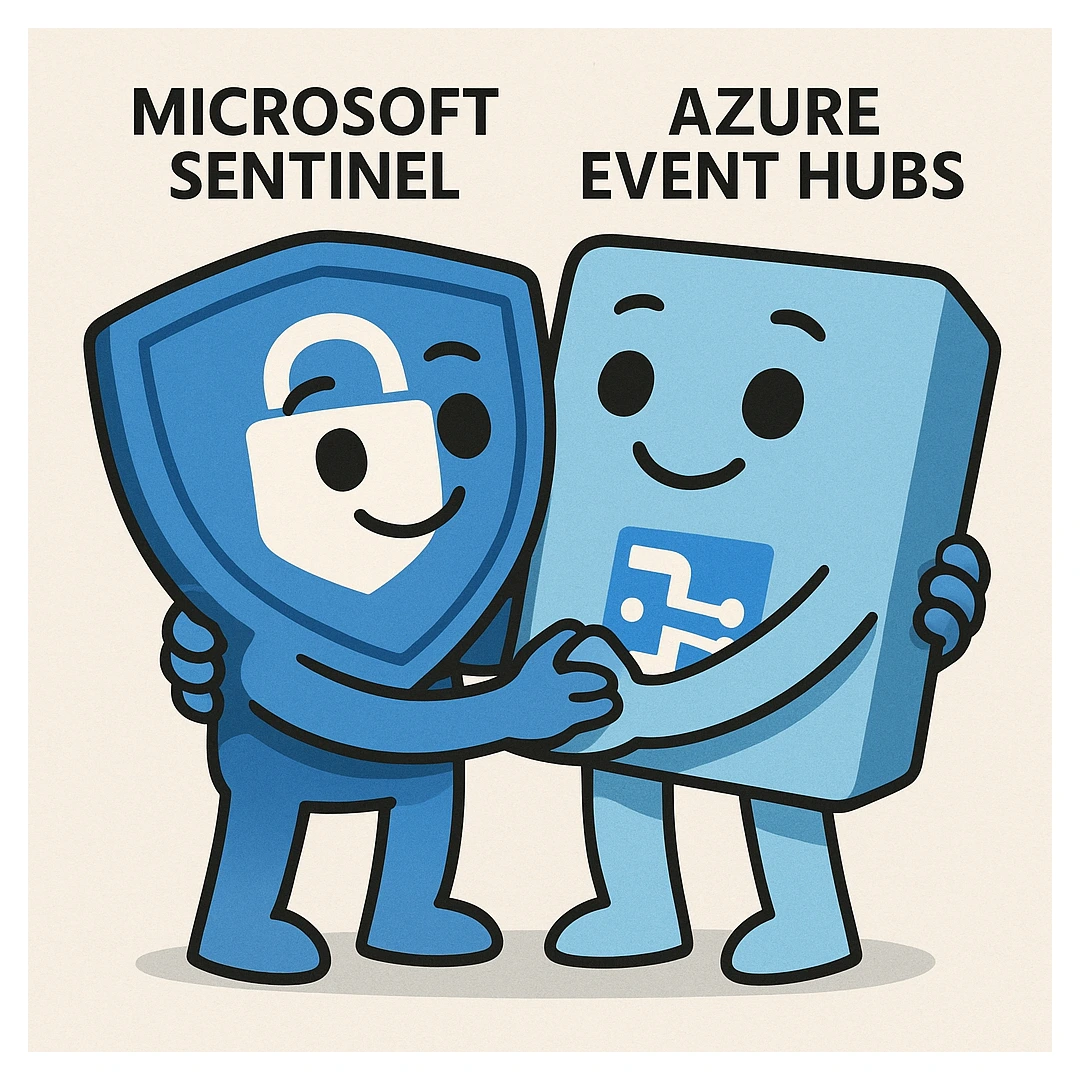

This preview DCR feature lets you read and process Event Hubs content directly, making it straightforward to connect an event hub to Sentinel and forward security-related data.

Preview DCR feature


The built-in Event Hubs data connector doesn’t ingest your event data; it only monitors the hub’s own operational behavior through its diagnostic settings. So, this DCR approach and the connector are complementary, not overlapping.

At first glance, this might not sound revolutionary. After all, Event Hubs are just a passthrough. Everything in them was already sent there by another service or application. So, why bother with this approach when there are other connectors, agents, and APIs available?

The answer lies in flexibility, scalability, and control. Azure Event Hubs is a highly scalable and reliable data streaming platform designed for ingesting large volumes of event and telemetry data. It enables efficient collection and processing of log data from various sources, making it an ideal component in modern log ingestion pipelines. The Event Hubs-to-DCR feature brings Azure Event Hubs into your Sentinel ingestion pipeline, so any system that can send logs to Event Hubs becomes a first-class citizen in your security operations.

The advantages of using Event Hubs fall into four groups:

- Event Hubs as a Native Destination

- Event Hubs as a Source for Multiple Consumers

- Event Hubs for Azure PaaS Resources

- General Advantages of Event Hubs as a Log Relay

1. Event Hubs as a Native Destination

Because this preview DCR feature picks up logs from an event hub and forwards them to Sentinel, it is worth understanding why Event Hubs is a good destination from the log source’s perspective, where it acts as a relay between the source and your SIEM.

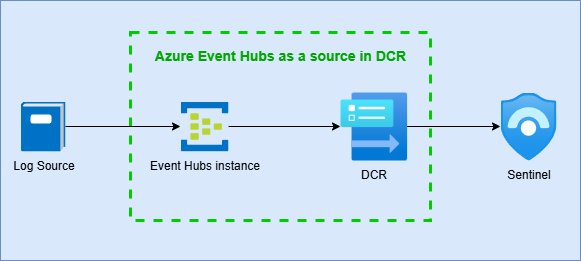

- Broad Ecosystem & Out-of-the-Box Integrations: Numerous third-party (non-Azure) log sources and data pipeline solutions support Event Hubs as a plug-and-play sink, so no custom Sentinel connector is needed, which shortens onboarding timelines.

- Drop-in replacement for Kafka brokers: Even when a source does not support Azure Event Hubs directly, Event Hubs exposes a Kafka-compatible endpoint (on every tier except Basic), so most Kafka-capable solutions can send to it with a configuration change rather than a code change. Apache Kafka is a widely used and widely supported data pipeline solution, so this DCR feature effectively provides a connector for many sources.

- Easy migration from Kafka: Because Event Hubs closely mirrors Kafka’s model, even building a new Event Hubs connector for a log source that already has a Kafka connector requires only minimal code changes.
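To make the Kafka-compatibility point concrete, here is a minimal sketch of the client settings a Kafka producer typically needs to point at an Event Hubs namespace. It assumes shared access signature (SAS) authentication with a connection string; the namespace name and connection string are placeholders, and the keys follow librdkafka naming (as used by `confluent-kafka`), so check your own client’s documentation for its equivalents.

```python
def event_hubs_kafka_config(namespace: str, connection_string: str) -> dict[str, str]:
    """Build librdkafka-style client settings for an Event Hubs Kafka endpoint.

    `namespace` and `connection_string` are placeholders you supply; the
    connection string comes from a shared access policy on the namespace.
    """
    if not namespace or not connection_string:
        raise ValueError("namespace and connection_string are required")
    return {
        # Event Hubs accepts Kafka clients on port 9093 of the namespace FQDN.
        "bootstrap.servers": f"{namespace}.servicebus.windows.net:9093",
        # TLS plus SASL PLAIN is the combination used with SAS connection strings.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "PLAIN",
        # The literal user name "$ConnectionString" signals that the password
        # field carries a full Event Hubs connection string.
        "sasl.username": "$ConnectionString",
        "sasl.password": connection_string,
    }
```

With `confluent-kafka`, you would pass this dict to `confluent_kafka.Producer(...)` and use the event hub name as the topic; the producing code itself stays the same.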

Lots of log sources support Event Hubs or Kafka as a logging destination, so a dedicated Sentinel connector might not be needed.


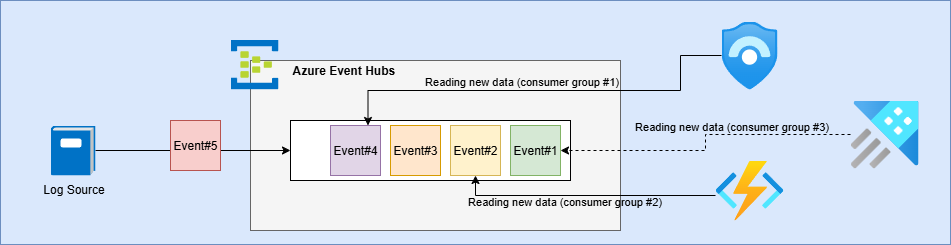

2. Event Hubs as a Source for Multiple Consumers

Using an Event Hub instance to store and forward data offers several advantages:

- Independent view by different consumers: Multiple analytics platforms - such as Sentinel, Azure Data Explorer, and third-party logging solutions - can independently access and analyze the same Event Hub data stream without implementing any re-ingestion or duplication logic.

- This approach can reduce costs, as the original log source only needs to forward the data once.

- Additionally, it guarantees a consistent, one-to-one copy of the logs, ensuring all consumers process the exact same data set.

- Fan-out architecture built-in: One published event can be delivered to many consumers, one per consumer group (the number of consumer groups per event hub is capped by tier, for example 20 on Standard). Adding a new downstream tool is a zero-disruption operation that does not impact existing readers. In contrast, traditional data pipeline tools like syslog-ng often require a complete restart when introducing new consumers, which can risk log loss. With Azure Event Hubs, you can add or remove consumers without affecting the other connected systems.

Adding a new consumer has no impact on existing consumers. By creating a new consumer group, the new reader can start from the earliest retained event and track its own progress independently. Each consumer group maintains its own progress state.
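The independence of consumer groups can be illustrated with a toy, in-memory model. This is not the Azure SDK: `EventHubSim` is a hypothetical class modeling a single partition, and in a real deployment readers persist their own checkpoints per consumer group and partition instead of the hub holding them in a dict.

```python
from dataclasses import dataclass, field


@dataclass
class EventHubSim:
    """Toy in-memory model of one event hub partition (not the Azure SDK)."""

    events: list[str] = field(default_factory=list)
    # Consumer group name -> offset of the next event that group will read.
    offsets: dict[str, int] = field(default_factory=dict)

    def publish(self, event: str) -> None:
        # Producers append once, regardless of how many consumers exist.
        self.events.append(event)

    def add_consumer_group(self, name: str, from_start: bool = True) -> None:
        # A new group starts at the oldest retained event or at the tail.
        self.offsets[name] = 0 if from_start else len(self.events)

    def read(self, group: str, max_events: int = 10) -> list[str]:
        # Each group advances only its own offset; other groups are untouched.
        start = self.offsets[group]
        batch = self.events[start:start + max_events]
        self.offsets[group] = start + len(batch)
        return batch
```

For example, after publishing three events and draining them through a hypothetical `sentinel-dcr` group, a group named `adx` created later still reads the same three events from the beginning, while `sentinel-dcr` keeps its own position.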


An architecture like this not only makes your logging infrastructure easy to extend but also prepares it for a zero-loss, zero-downtime migration if you decide to move away from your SIEM, effectively future-proofing your system.

3. Event Hubs for Azure PaaS Resources

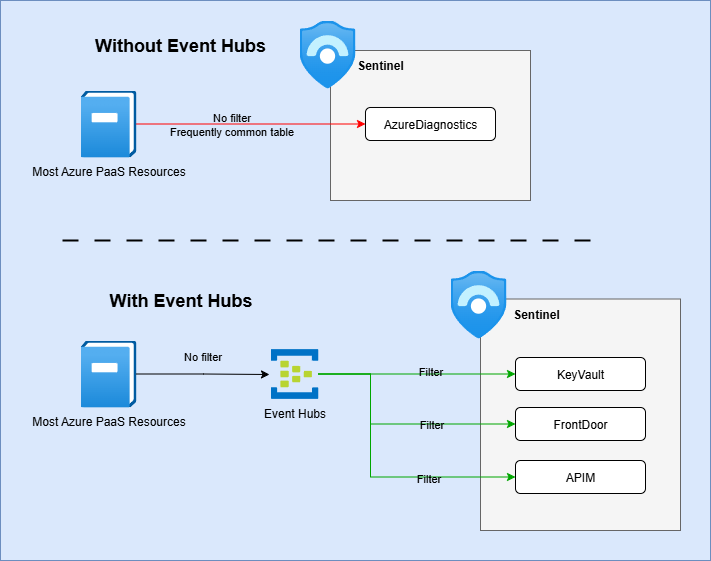

Azure PaaS cloud resources are frequent targets for attackers, yet they often remain unmonitored in Sentinel. A primary reason these logs aren’t collected is the potentially high cost, since Sentinel cannot natively filter out unnecessary events from these sources. By using Event Hubs as a source in Data Collection Rules, organizations gain far greater control over which PaaS log data is ingested into Sentinel, enabling smarter, more cost-effective security monitoring.

- Enhanced Filtering and Transformation: Most Azure PaaS diagnostic-to-workspace connectors let you choose log categories but cannot filter or drop individual log rows or fields. In contrast, Event Hubs → DCR pipelines support Kusto-based ingestion-time transforms. Organizations frequently skip onboarding their cloud logs because of the sheer volume of unfilterable data, so this option lets them gain at least some visibility at a lower cost.

- Table customization: Currently, many Azure PaaS resources only write to the common AzureDiagnostics table instead of dedicated tables. As a result, all your PaaS data is consolidated into a single table, which makes implementing granular access controls, retention policies, and table tiering challenging or even impossible. By routing this data through Event Hubs, you can forward logs into separate custom tables, giving you full control over their management.

Azure PaaS resources vary in their integration capabilities with Sentinel. Some provide dedicated tables, while others do not, and filtering options are often unavailable. The diagram is intended to illustrate general capabilities only.

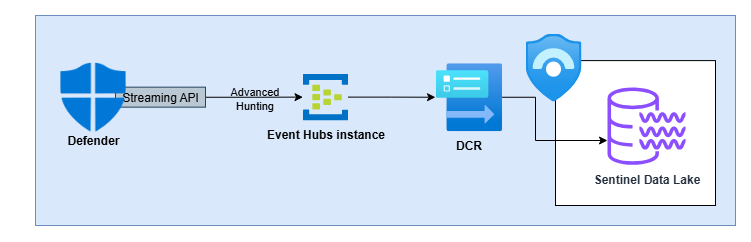

- Defender Advanced Hunting data: Not PaaS, but… You can efficiently export Defender Advanced Hunting data to Event Hubs and collect it using this DCR type. From there, the data can be routed to ‘Auxiliary’ or ‘Data Lake’ tables (if Sentinel Data Lake is enabled) at a lower cost, rather than ingesting it directly into the Sentinel SIEM Analytics tier.
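In a DCR, each entry in `dataFlows` pairs a KQL `transformKql` statement (of the form `source | where ... | project ...`) with an `outputStream` that names the target table, so several data flows can split one event hub into separate custom tables. The Python below is only an analogue of that logic, useful for reasoning about what would be kept and where it would land; the category names, the noise rule, the kept fields, and the `_CL` table names are all hypothetical choices.

```python
from typing import Any

Record = dict[str, Any]

# Hypothetical routing: one data flow per category, each writing to its own
# custom table. Category and table names are illustrative only.
ROUTES: dict[str, str] = {
    "AuditEvent": "KeyVaultAudit_CL",
    "StorageRead": "StorageRead_CL",
}

# Hypothetical column selection, playing the role of a KQL `project`.
KEPT_FIELDS = ("time", "resourceId", "operationName", "resultType", "callerIpAddress")


def keep(record: Record) -> bool:
    """Hypothetical noise rule (a KQL `where`): drop successful storage reads."""
    return not (record.get("category") == "StorageRead"
                and record.get("resultType") == "Success")


def transform_and_route(records: list[Record]) -> dict[str, list[Record]]:
    """Filter rows, trim columns, and group the survivors by target table."""
    out: dict[str, list[Record]] = {}
    for record in records:
        table = ROUTES.get(record.get("category", ""))
        if table is None or not keep(record):
            continue  # Unrouted categories and filtered rows are never ingested.
        # Keep only the selected fields; fields a record lacks are skipped.
        slim = {k: record[k] for k in KEPT_FIELDS if k in record}
        out.setdefault(table, []).append(slim)
    return out
```

Rows whose category has no route, or that match the noise rule, never reach a table, and bulky nested fields such as `properties` are dropped before ingestion; that is where the cost savings come from.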

Sending Defender Advanced Hunting data to Sentinel Data Lake without paying for Analytics-tier ingestion.


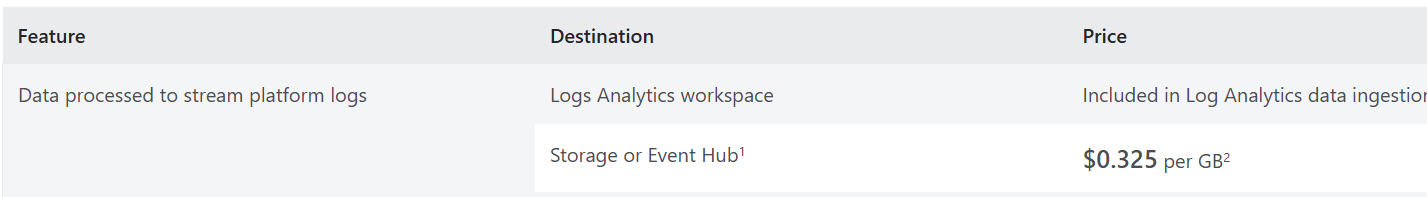

When you send data directly from a PaaS resource to Sentinel, your only cost is Sentinel ingestion. If you use Event Hubs as a log relay, you also incur Event Hubs usage charges and pay an additional export fee for pushing data from the PaaS resource into Event Hubs. From a cost perspective, this approach therefore only pays off if your filters drop enough data to offset those extra charges.

The cost of Platform Log Export
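The “only with filters” conclusion can be made precise with a little arithmetic. The sketch below compares the per-GB cost of the relay against direct ingestion and solves for the share of data the transform must drop to break even. It simplifies on purpose: Event Hubs is actually billed by throughput or processing units plus ingress events, and commitment tiers are billed per day, so every price here is a hypothetical per-GB approximation you would replace with your own figures.

```python
def relay_cost_per_gb(ingest: float, export: float, event_hubs: float,
                      drop_ratio: float) -> float:
    """Cost of 1 GB of source logs sent through Event Hubs and a filtering DCR."""
    if not 0.0 <= drop_ratio <= 1.0:
        raise ValueError("drop_ratio must be between 0 and 1")
    # Export and Event Hubs charges apply to everything the source emits;
    # Sentinel ingestion applies only to what survives the transform.
    return export + event_hubs + (1.0 - drop_ratio) * ingest


def break_even_drop_ratio(ingest: float, export: float, event_hubs: float) -> float:
    """Share of data the filter must drop before the relay matches direct ingestion."""
    if ingest <= 0:
        raise ValueError("ingest price must be positive")
    # Solve export + event_hubs + (1 - f) * ingest == ingest for f.
    return (export + event_hubs) / ingest
```

For example, with a placeholder ingestion price of 4.0, an export fee of 0.25, and Event Hubs costs of 0.25 per GB, `break_even_drop_ratio(4.0, 0.25, 0.25)` returns 0.125: the transform must discard more than 12.5% of the volume before the relay becomes cheaper than direct ingestion.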


Azure Monitor often adds extra enrichment data to logs. If you forward logs through Event Hubs, this additional information is omitted. Normalization is not applied either, so the log format may differ from what you expect.
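To see what a “different” format looks like in practice, it helps to inspect the raw messages. Diagnostic settings that stream to an event hub wrap resource-log entries in a JSON document with a top-level `records` array, and each entry follows the common resource-log schema (`time`, `resourceId`, `category`, `operationName`, `properties`, and so on). The sketch below unwraps one such message; how the preview DCR maps this envelope to table rows is something to verify in the documentation, and the selected fields are an illustrative choice.

```python
import json
from typing import Any


def unwrap_resource_logs(event_body: bytes) -> list[dict[str, Any]]:
    """Split one Event Hubs message from a diagnostic setting into log rows.

    Diagnostic settings batch several resource-log entries into a single JSON
    document of the form {"records": [...]}.
    """
    payload = json.loads(event_body)
    rows = []
    for record in payload.get("records", []):
        rows.append({
            # Common resource-log fields; not every category populates all of them,
            # so missing ones come back as None.
            "time": record.get("time"),
            "resourceId": record.get("resourceId"),
            "category": record.get("category"),
            "operationName": record.get("operationName"),
            # Category-specific details stay nested. Nothing here adds the
            # enrichment columns a workspace table would normally carry.
            "properties": record.get("properties", {}),
        })
    return rows
```

Whatever consumes the stream, whether a DCR transform or another tool reading its own consumer group, has to cope with this raw shape rather than with the normalized columns of the corresponding workspace table.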

4. General Advantages of Event Hubs as a Log Relay

Several general attributes of Azure Event Hubs benefit any logging pipeline.

- Decoupling Producers and Consumers: Event Hubs decouples data emitters from processors. Producers simply push events; consumers independently pull at their own pace. This separation increases resilience: for example, a Sentinel outage won’t immediately result in log loss. The Standard tier retains events for up to 7 days, allowing downstream consumers to recover from temporary outages.

- Disaster Recovery / Backup setup: You can also establish a disaster recovery or backup setup. For example, a separate consumer group can serve a DR tool that reads and stores data only when the main consumers are unavailable. With appropriate retention settings, Event Hubs can keep data for an extended period, although 24 hours is typically sufficient for most logging pipelines.
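Whether a consumer survives an outage comes down to two numbers: the outage must be shorter than the retention period, and the consumer must read faster than producers write in order to catch up. The helpers below are a back-of-the-envelope sketch with hypothetical rates; they ignore partition-level skew and throttling.

```python
def backlog_survives(outage_hours: float, retention_hours: float) -> bool:
    """True if no unread event expires before a recovered consumer reaches it."""
    # At recovery the oldest unread event is exactly `outage_hours` old, and it
    # is read first; later events are younger, so this is the binding case.
    return outage_hours <= retention_hours


def catch_up_hours(outage_hours: float, ingress_gb_per_hour: float,
                   consume_gb_per_hour: float) -> float:
    """Time for a recovered consumer to drain its backlog and reach the tail."""
    if consume_gb_per_hour <= ingress_gb_per_hour:
        raise ValueError("consumer must read faster than producers write")
    backlog_gb = outage_hours * ingress_gb_per_hour
    # New events keep arriving while draining, so only the surplus rate helps.
    return backlog_gb / (consume_gb_per_hour - ingress_gb_per_hour)
```

With 24 hours of retention, a 6-hour outage, 10 GB/h of ingress, and a consumer that can read 30 GB/h, `backlog_survives(6, 24)` is `True` and `catch_up_hours(6, 10, 30)` returns 3.0: the 60 GB backlog drains at a net 20 GB/h.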

Limitations and disadvantages

While this preview feature brings significant flexibility and resilience to your logging pipeline, there are several important drawbacks to weigh:

- Preview-stage volatility: Because the Event Hubs-as-source DCR is still in preview, its behavior and API may change without notice, and you may encounter bugs (I did).

- Commitment-tier or dedicated-cluster requirement: To use this capability, your Sentinel workspace must be on a commitment tier or linked to a Log Analytics dedicated cluster. For small and mid-sized organizations, this can be cost-prohibitive, because commitment tiers start at 100 GB/day and typically make sense only if you’re ingesting that much or more.

- Additional charges: Compared with sending PaaS logs directly to Sentinel, relaying them through Event Hubs adds Event Hubs usage charges and a platform log export fee, so it is only cost-effective if you filter out enough data to offset them.

- Limited Region Availability: Because the feature is still in preview, it is not yet available in all Azure regions. Check the documentation for the list of supported regions.

Event Hubs as a relay

In summary, Event Hubs brings significant advantages to a log ingestion pipeline, and with this preview feature it now integrates with Sentinel as well. With it, you can build a solution that delivers the following benefits:

- A highly scalable and resilient infrastructure

- Support for many log sources, even when direct Sentinel data connectors are unavailable

- A flexible architecture where consumers can be added or removed at any time without impacting others (for example, adding a backup consumer alongside your SIEM)

- The ability to apply filtering to data sources that previously lacked this capability in Sentinel

- A highly available system, thanks to retention and the decoupling of publishers and consumers, that lets consumers recover from downtime without data loss

- The option to set up custom high availability and disaster recovery configurations