In the last post, we looked at the ‘Direct’ DCR that simplifies API-based data ingestion. Today, we’re looking at the AgentDirectToStore Data Collection Rule type, which gives you more options for where to send your data.

The ‘AgentDirectToStore’ DCR lets the Azure Monitor Agent collect Azure VM data and send it straight to Azure Storage (Blob or Table) or Event Hubs - skipping Log Analytics entirely. This route gives you more control over where your monitoring data lands.

Retirement notice: Send data to Event Hubs and Storage (Preview) will be retired on 31 July 2026.

Although the DCR has strict limitations today, it is an excellent fit for simpler environments and smaller organizations. If you need an all-in-one solution for Azure VMs that avoids managing complex data pipelines or third-party tools, this DCR can streamline your workflow.

Read another episode of this four-part series:

- Direct: In the previous post, I focused on the Direct DCR type, which streamlines log collection configuration for API-based sources.

- AgentDirectToStore: In this latest post, I demonstrate how to use this DCR to natively send events from your Azure VMs to a Storage Account or Event Hub.

- Event Hub as a Log Relay: The third installment of this article series explores the advantages and disadvantages of utilizing Event Hub as a log relay to Sentinel - a capability now supported by DCRs.

- Upcoming

AgentDirectToStore DCR for Azure VMs

The ‘AgentDirectToStore’ Data Collection Rule (DCR) is a special type of DCR that routes data collected by the Azure Monitor Agent directly to Azure Storage - either to a Blob container or a Table storage - or to Azure Event Hubs, rather than sending it to a Log Analytics workspace.

This approach is especially useful when you want to archive telemetry for long-term retention, store less-critical monitoring data in cost-effective storage, or connect VM-hosted logs with external systems for real-time processing and analytics. By supporting these alternative destinations, AgentDirectToStore DCRs provide added flexibility for organizations looking to simplify their monitoring workflows and integrate with a wider range of data pipelines.
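To make the routing concrete, here is a minimal sketch in Python that assembles the body of such a rule as a plain dictionary and prints it as JSON. It is an illustration under assumptions, not a definitive template: the property names (`eventHubsDirect`, `storageBlobsDirect`, the `Microsoft-Syslog` stream) and the API version follow Microsoft's published ARM sample for this DCR type as best I can tell and should be checked against the current documentation. The resource IDs, the destination names, and the `build_dcr` and `undefined_destinations` helpers are hypothetical.

```python
import json


def build_dcr(name, location, event_hub_id, storage_account_id, container_name):
    """Return an AgentDirectToStore DCR resource body as a plain dict."""
    return {
        "type": "Microsoft.Insights/dataCollectionRules",
        "apiVersion": "2022-06-01",  # assumption: verify against current docs
        "name": name,
        "kind": "AgentDirectToStore",
        "location": location,
        "properties": {
            "dataSources": {
                "syslog": [{
                    "name": "syslogSource",
                    "streams": ["Microsoft-Syslog"],
                    "facilityNames": ["local0"],
                    "logLevels": ["Info", "Notice", "Warning", "Error"],
                }]
            },
            # No Log Analytics workspace here: data goes straight to the stores.
            "destinations": {
                "eventHubsDirect": [{
                    "name": "ehDest",
                    "eventHubResourceId": event_hub_id,
                }],
                "storageBlobsDirect": [{
                    "name": "blobDest",
                    "storageAccountResourceId": storage_account_id,
                    "containerName": container_name,
                }],
            },
            # No transformKql key: this DCR type does not support it.
            "dataFlows": [{
                "streams": ["Microsoft-Syslog"],
                "destinations": ["ehDest", "blobDest"],
            }],
        },
    }


def undefined_destinations(dcr):
    """Return data-flow destination names that no destination entry defines."""
    props = dcr["properties"]
    defined = {
        entry["name"]
        for entries in props["destinations"].values()
        for entry in entries
    }
    used = {name for flow in props["dataFlows"] for name in flow["destinations"]}
    return sorted(used - defined)


# Placeholder resource IDs; replace them with real ones before deploying.
dcr = build_dcr(
    name="dcr-direct-to-store",
    location="westeurope",
    event_hub_id="/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.EventHub/namespaces/<namespace>/eventhubs/<hub>",
    storage_account_id="/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.Storage/storageAccounts/<account>",
    container_name="syslog",
)
print(json.dumps(dcr, indent=2))
```

The small `undefined_destinations` check catches a common slip: a data flow that names a destination the rule never defines.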

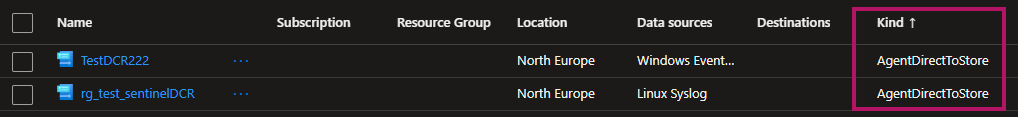

AgentDirectToStore DCRs on the GUI


Constraints

Although the ‘AgentDirectToStore’ DCR provides unique data routing capabilities, its limitations make it unsuitable for most production scenarios. For full details, refer to the official Microsoft documentation.

Here are the most important constraints:

- Azure VM Only: This DCR works exclusively with Azure VMs; Microsoft has stated there are no plans to support Arc-enabled machines.

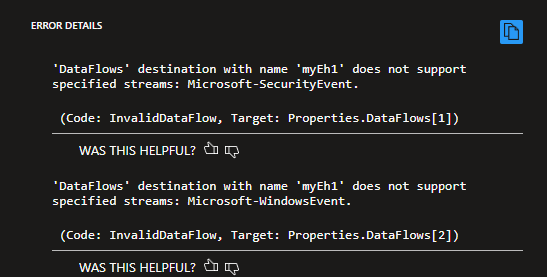

- Windows Event Log Structure: While you can collect Windows Event logs, the SecurityEvent and WindowsEvent (WEF) structures are not available. All logs arrive in the native Event log format.

Unsupported Windows Security Event schema

- Syslog Format: Syslog is supported, but not in the CEF (CommonSecurityLog) format. All Syslog-based sources are ingested as raw Syslog, which can limit downstream processing and compatibility.

- No GUI support: Although it isn’t a problem for most users, you currently can’t deploy this DCR through the GUI - you must use an ARM template.

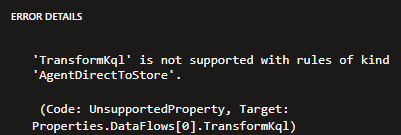

- No transformKQL: Data transformation via KQL queries in this DCR type is not supported.

No support for transformKql

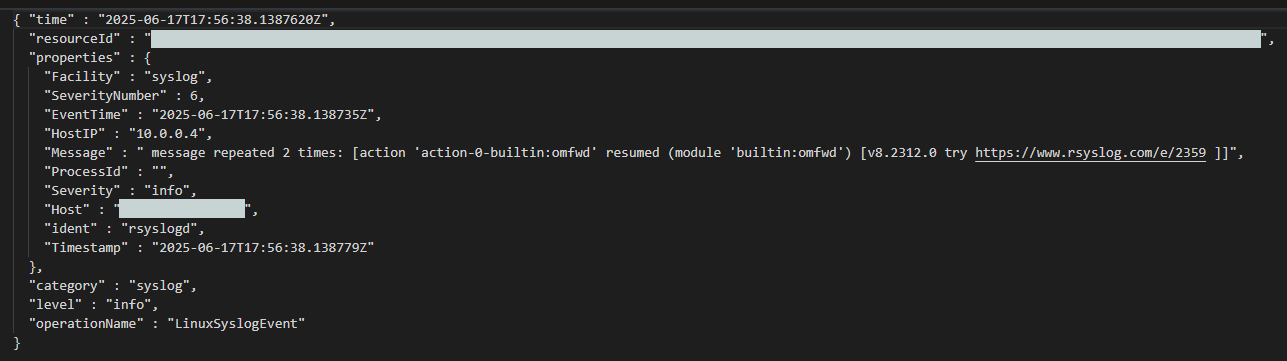

- Different format: The logs will have a slightly different format than what you are used to in Sentinel. This could require some queries to be rewritten.

Syslog log format in Event Hub - see the Message field instead of SyslogMessage


Scenarios

While not perfect, this DCR can deliver benefits in situations where its constraints pose no issue:

- Azure-Hosted Log Collectors: Many organizations already run collector VMs (such as Syslog collectors) in Azure. These machines often handle large log volumes, and because this DCR type is already available, it can take on that volume right away: AgentDirectToStore DCRs can forward all these noisy logs to a Storage Account or Event Hub (and from there to Azure Data Explorer) without complex or custom setups.

- Simpler Pipelines: Currently, most companies use Logstash or similar tools to send data to ADX. While these tools offer flexibility, they require additional management. The AgentDirectToStore DCR offers a simpler - though more limited - alternative for direct event forwarding. Smaller companies benefit from managing fewer tools - no Logstash or Fluent Bit - while still gaining cloud-based administration.

- Compliance and Data Lakes: When logs are retained for compliance or data lake purposes, the precise format is less important. In these scenarios, the absence of pre-parsing and the event format limits are usually acceptable trade-offs.

Note: The log format will differ from what you’d see in standard Log Analytics tables. Some enrichment data may be missing, and field names could vary (for example, Syslog messages may lack fields like SyslogMessage or TimeGenerated). So, do not consider this a one-to-one copy of data in Sentinel.
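If downstream queries expect the Log Analytics field names, one option is to normalize records after they leave the Event Hub or Storage Account. The sketch below is only an illustration of that idea: it renames `Message` to `SyslogMessage` and fills in `TimeGenerated` when it is absent. The `FIELD_MAP` table, the `normalize_syslog` helper, the choice of a receive timestamp as the fallback, and the sample record (including its `Facility` field) are all hypothetical; inspect your own forwarded events to see which fields actually arrive.

```python
from datetime import datetime, timezone

# Hypothetical mapping from forwarded field names to Log Analytics-style names.
FIELD_MAP = {"Message": "SyslogMessage"}


def normalize_syslog(record, received_at=None):
    """Return a copy of record with renamed fields and a TimeGenerated fallback."""
    out = {FIELD_MAP.get(key, key): value for key, value in record.items()}
    if "TimeGenerated" not in out:
        # Assumption: the time the record was received is an acceptable stand-in.
        timestamp = received_at or datetime.now(timezone.utc)
        out["TimeGenerated"] = timestamp.isoformat()
    return out


# Made-up sample record for illustration only.
sample = {"Facility": "local0", "Message": "link down on port 3"}
normalized = normalize_syslog(sample, datetime(2025, 1, 1, tzinfo=timezone.utc))
print(normalized)
```

A mapping table keeps the renames in one place, so queries written for Sentinel need fewer changes when more field differences turn up.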

Cloud-managed Azure Log collectors

Despite its limits - and its focus on testing scenarios or lightweight deployments - this DCR type can be utilized as a low-cost, cloud-managed storage option for small companies that want to shift complexity off-premises. For Azure-based collectors, this DCR can redirect high-volume, low-security-value network logs (routers, switches, access points) away from costly Sentinel storage. Instead, the data moves to Event Hubs and then ADX for long-term, non-detection queries, while analysts can still pull extra context when required.

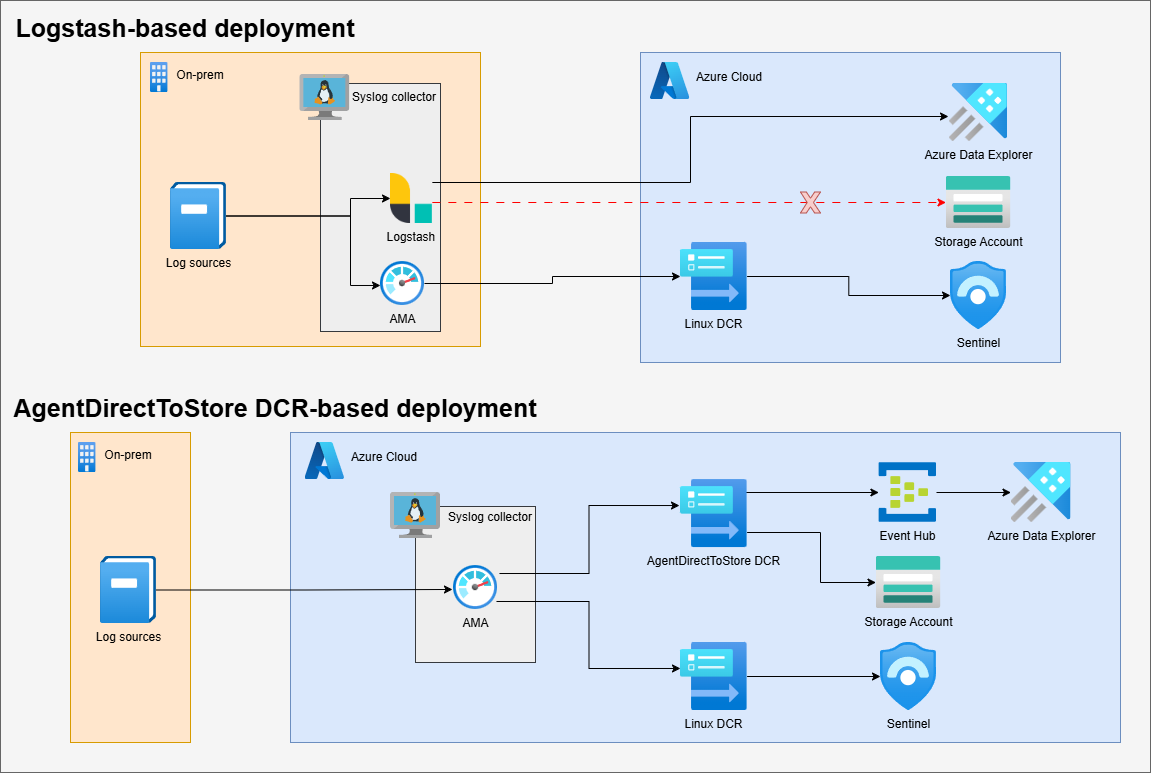

If you are already comfortable with Logstash or require support for on-premises machines, there are currently few advantages for you, as shown below:

Comparison of the two designs


Adopting this DCR does not exactly simplify things compared to Logstash; it merely shifts the complexity to the cloud by adding a new DCR and an Event Hub. However, for many situations (e.g., for MSSPs without direct access to a collector VM) handling this complexity in the cloud solves a range of challenges. Also, DCRs and Event Hubs are core Azure services that most cloud engineers already know.

Deployment steps

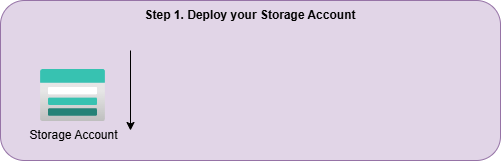

If you plan to use or simply test this DCR, follow these steps:

- Create Destination Storage: Set up your Event Hub namespace and instance, or Storage Account to be used as the destination. Ensure the destination exists before proceeding; otherwise, the AgentDirectToStore deployment will fail.

Deployment step 1

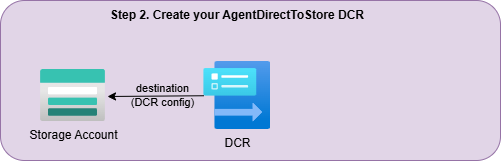

- Create the DCR: Deploy the AgentDirectToStore DCR. You can use the sample code in my GitLab repo. At this time, the GUI cannot be used to create this DCR, so your best option is to use the ARM template.

Deployment step 2

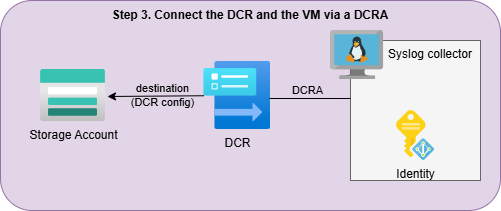

- Associate the VM: Connect the VM to the DCR using a Data Collection Rule Association (DCRA). You can use any method to do this. For testing you can use my simple ARM template.

Deployment step 3

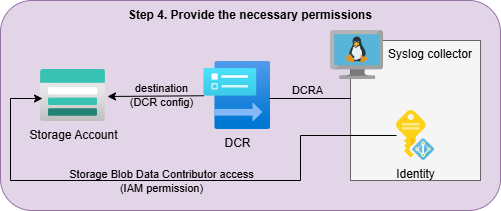

- Assign Permissions: Grant the VM’s managed identity (user-assigned or system-assigned) the necessary roles, depending on your destination. You can do this on the GUI by going to the IAM page of your resources (Event Hub or Storage Account) and assigning one of the following roles to the identity:

- Storage Table Data Contributor (for table storage)

- Storage Blob Data Contributor (for blob storage)

- Azure Event Hubs Data Sender (for Event Hubs)

Deployment step 4
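The association and permission steps can be sketched in Python as follows. This is a hedged illustration rather than the templates referenced above: `build_dcra` assembles a Data Collection Rule Association body that would be deployed at the scope of the VM, and `required_roles` looks up the role names listed in the permissions step. The destination labels (`table`, `blob`, `eventhub`), both helper names, the API version, and the placeholder resource ID are assumptions made for this example.

```python
# Role names as listed in the permissions step, keyed by a hypothetical label.
ROLE_BY_DESTINATION = {
    "table": "Storage Table Data Contributor",
    "blob": "Storage Blob Data Contributor",
    "eventhub": "Azure Event Hubs Data Sender",
}


def required_roles(destinations):
    """Return the sorted, de-duplicated role names for the given labels."""
    unknown = set(destinations) - set(ROLE_BY_DESTINATION)
    if unknown:
        raise ValueError(f"unsupported destination(s): {sorted(unknown)}")
    return sorted({ROLE_BY_DESTINATION[label] for label in destinations})


def build_dcra(name, dcr_resource_id):
    """Return a DCRA body that links a VM (the deployment scope) to a DCR."""
    return {
        "type": "Microsoft.Insights/dataCollectionRuleAssociations",
        "apiVersion": "2022-06-01",  # assumption: verify against current docs
        "name": name,
        "properties": {"dataCollectionRuleId": dcr_resource_id},
    }


# Placeholder DCR resource ID; replace it with the ID of your deployed rule.
dcra = build_dcra(
    "vm-to-direct-store",
    "/subscriptions/<sub-id>/resourceGroups/<rg>/providers/Microsoft.Insights/dataCollectionRules/<dcr-name>",
)
roles = required_roles(["eventhub", "blob", "eventhub"])
print(dcra["properties"]["dataCollectionRuleId"])
print(roles)
```

Keeping the role lookup separate from the association makes it easy to see which identity needs which role when a rule writes to more than one destination.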


Alternative Destination Capabilities

The ‘AgentDirectToStore’ DCR from Microsoft currently has several notable limitations, as previously mentioned, which often restrict its practical application. However, looking ahead, there are promising signs of future improvements. The DCR API documentation mentions some features that could become valuable over time, listing several destination types that are not yet supported by general Linux or Windows log collection DCRs.

At present, some of these destinations are available only in specific scenarios, like metric collection with the PlatformMetrics DCR type. However, there are indications that Microsoft intends to expand these options. During testing, I received a ‘private preview’ error while deploying DCRs. This suggests Microsoft is actively working on new storage options that will eventually support resources beyond Azure VMs, though these features are not yet available to the public.

Interesting storage destinations in the API documentation.


Microsoft’s general log collection pipeline with Event Hub, ADX, or Storage Account integration capabilities could easily push many third-party telemetry collectors and processors aside for many projects. I’m genuinely excited to see what Microsoft introduces next in this area.

Continuing the series

If you are interested in learning about some other lesser-known aspects of DCRs, check out another article in this four-part series:

- Direct DCRs: In the previous post, I focused on the Direct DCR type, which streamlines log collection configuration for API-based sources.

- AgentDirectToStore DCRs: In this latest post, I demonstrate how to use this DCR to natively send events from your Azure VMs to a Storage Account or Event Hub.

- Event Hub as a Log Relay: The third installment of this article series explores the advantages and disadvantages of utilizing Event Hub as a log relay to Sentinel - a capability now supported by DCRs.

- Coming soon